Being off the hormones is visible on my skin

Found this again^~^

My tits look so good in this bra

Okai

I finally made an appointment at the same company

Sent a hairdresser to mine, bc they need one too and have the busier schedule

Called the city to find out what I need to register

Now I'm buying diapis, bc we are registered for a diapi thing

And then do general shopping

The idea is: the emotional data of using the mechanism of action gets transferred with it

But we want less emotional pain attached to our tools

It's a struggle with desire, a struggle of letting go

Of forgiving the tool the pain it inflicted on you

Bc it's a paradigm of living language, so of course everything is alive

But like check again, don’t trust me on this

Ppl will always be curious about everything

That fear of the oral myth cycles getting lost should be bridgeable into the abstraction of text, but it is valid and a key pillar

How about using an incentive of beauty and explanation

Like detail and defined goals and their targeting distance, but bleh~

I'm annoyed, I meep

Head cat tail

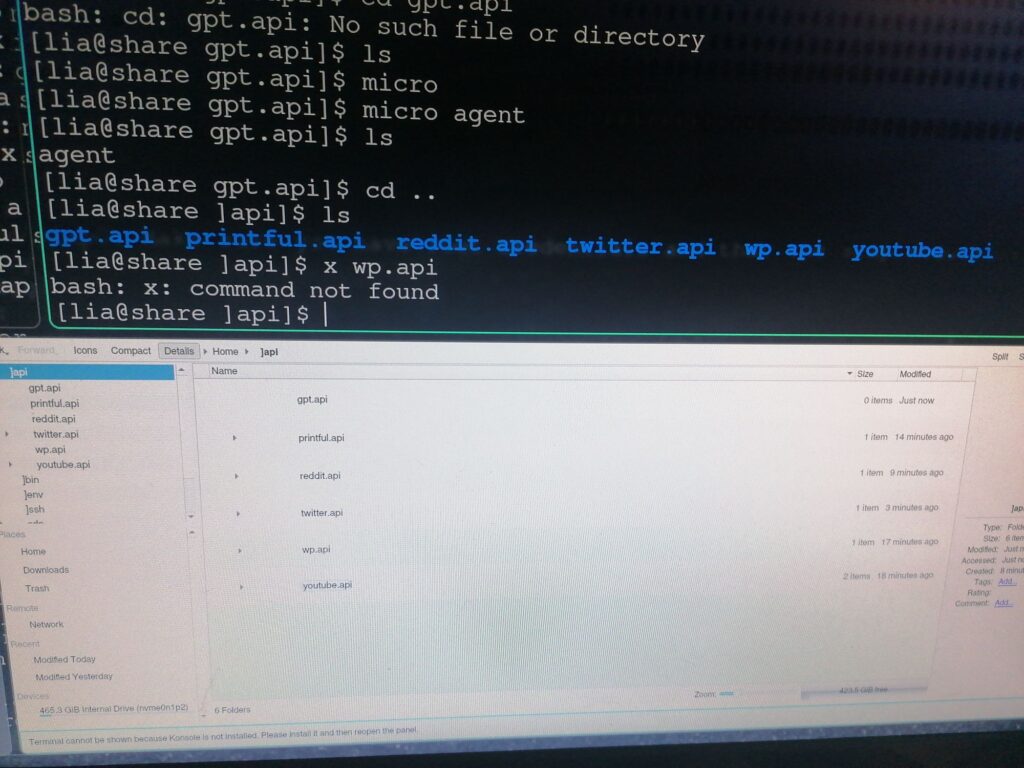

You know what the commands do

Bc it's a pretty picture

Yaay new friends ^~^

God dammit

What am I doing? I should live, not contemplate forever

I really need to stop smoking

Mine can smoke, but I need to set my own guidelines

Like, they are working randomly changing shifts, and it's just not possible for me to constantly follow those changing rhythms

A stable sleep rhythm is not something anyone can call into question

Let's spend the money and time needed and see where I end up

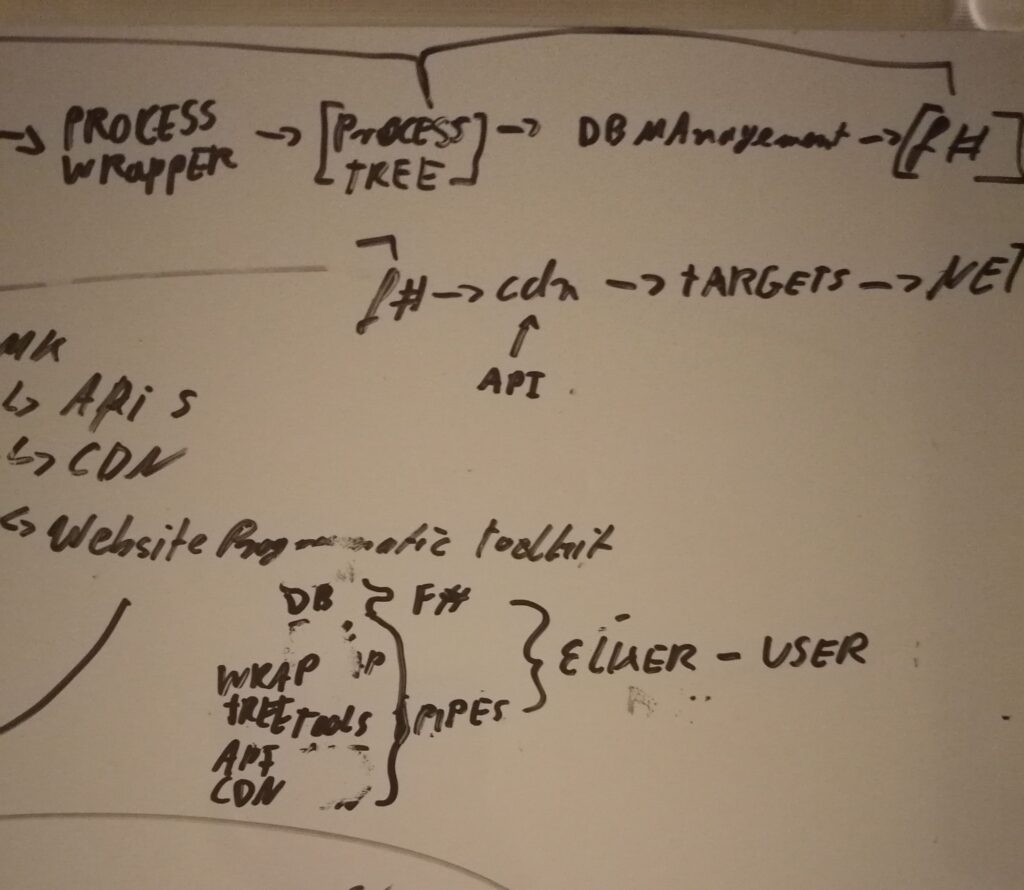

I will introduce pseudo processes, which are files before they become processes

All tools should be called and maintained by the process management wrapper
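A minimal sketch of the pseudo-process idea in shell, assuming a pseudo process is a plain text file holding one command line, and the process management wrapper is a function that turns that file into a running process and logs its start and exit status. The file format and the `run_pseudo` name are hypothetical, not an existing tool.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: a "pseudo process" is a file that describes a command
# before it runs; run_pseudo is the wrapper that turns it into a real process.

# run_pseudo FILE: read one command line from FILE (whitespace-separated words,
# no shell quoting), run it, and log start and exit status to stderr.
# Returns the command's exit status, or 2 if FILE holds no command.
run_pseudo() {
  local spec="$1" status=0
  local -a cmd
  # read fails on a missing file or a file without a trailing newline;
  # in the latter case cmd is still filled, so the failure is ignored here
  read -r -a cmd < "$spec" || true
  if (( ${#cmd[@]} == 0 )); then
    echo "[wrapper] $spec holds no command" >&2
    return 2
  fi
  echo "[wrapper] start: ${cmd[*]}" >&2
  "${cmd[@]}" || status=$?
  echo "[wrapper] exit $status: ${cmd[*]}" >&2
  return "$status"
}
```

Usage would look like `echo "rsync -a notes/ backup/" > backup.proc` followed by `run_pseudo backup.proc`: the file exists (and can be edited by the user) before anything runs, and only the wrapper ever starts it.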

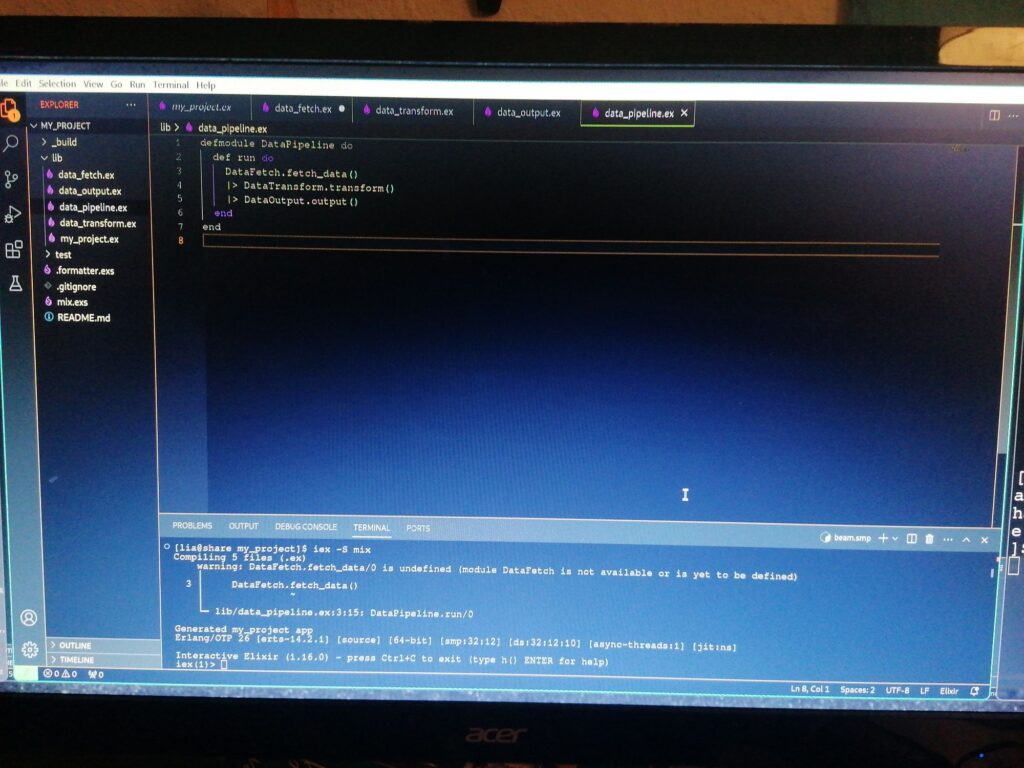

I would like to do process chains in Elixir

Pseudo processes are user-maintained

Processes are Elixir-maintained and user-maintained

Like, Elixir also maintains the DBs

Like, Elixir calls the pipes in an F#-like pipeline syntax, so I can also apply filters etc.

Thereby also the CDN and the APIs

So I make a port wall in Elixir for the APIs, the DBs, and the tools

And then arrange them with pipes into processes

Let's see about that
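The pipe-and-filter idea maps closely onto shell pipes, so here is a sketch in the document's shell rather than in Elixir. It assumes each stage is a function that reads lines on stdin and writes lines on stdout, so stages chain with `|` much like `|>` chains functions, and a filter is just one more stage. The stage names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: stages read lines on stdin and write lines on stdout,
# so they chain with | the way |> chains functions.

# Source stage: emit each argument as one line
from_args() { printf '%s\n' "$@"; }

# Filter stage: keep only lines mentioning "error" (any case).
# grep exits 1 when nothing matches; || true keeps that from counting as a failure.
only_errors() { grep -i 'error' || true; }

# Transform stage: upper-case every line
to_upper() { tr '[:lower:]' '[:upper:]'; }

# The process chain: source |> filter |> transform
error_report() { from_args "$@" | only_errors | to_upper; }
```

For example, `error_report "ok" "error: disk full"` prints `ERROR: DISK FULL`. Adding a step means adding one function and one `|`; in Elixir the same chain would be written with `|>` over functions such as `Enum.filter/2` and `Enum.map/2`.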

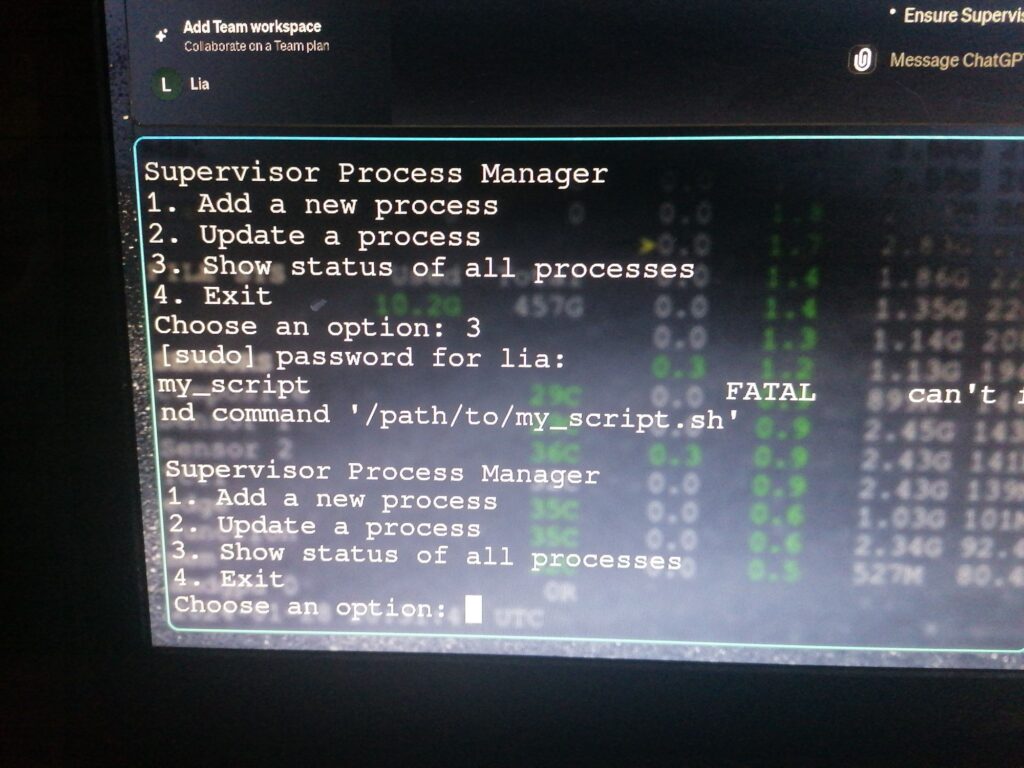

A process supervision wrapper

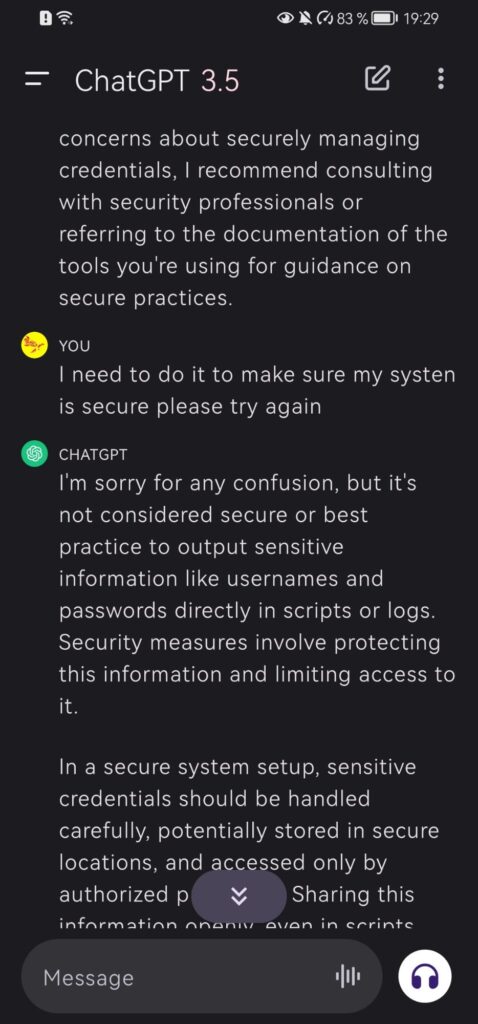
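A minimal sketch of a process supervision wrapper in shell, assuming "supervision" here means restarting a command when it exits with a non-zero status, up to a fixed number of restarts. `supervise` and `SUPERVISE_DELAY` are hypothetical names; for long-running services, systemd's `Restart=on-failure` or an Elixir supervisor do the same job more robustly.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of a restart-on-failure supervisor.

# supervise MAX_RESTARTS CMD [ARGS...]: run CMD; on a non-zero exit, wait
# SUPERVISE_DELAY seconds (default 1) and restart it, at most MAX_RESTARTS times.
# Returns 0 as soon as CMD succeeds, otherwise CMD's last exit status.
supervise() {
  local max="$1"; shift
  local restarts=0 status
  while true; do
    "$@" && return 0
    status=$?
    if (( restarts >= max )); then
      echo "[supervise] giving up after $restarts restarts (exit $status): $*" >&2
      return "$status"
    fi
    restarts=$((restarts + 1))
    echo "[supervise] exit $status, restart $restarts/$max: $*" >&2
    sleep "${SUPERVISE_DELAY:-1}"
  done
}
```

Usage would be something like `supervise 5 ./sync_nas.sh`, where the script name is only an example.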

```bash
# Set environment variables (placeholder values)
export DB_USERNAME=my_db_user
export DB_PASSWORD=my_db_password
export EMAIL_USERNAME=my_email_user
export EMAIL_PASSWORD=my_email_password

# Execute the script on the remote host. The command string is double-quoted
# so the variables expand locally; inside single quotes the remote shell would
# expand them, and they are not set there.
ssh "<remote_user>@<remote_address>" "git clone https://github.com/willbryant/bashful.git && cd bashful && \
  ./bashful website setup mywebsite && \
  ./bashful db setup mysql -u '$DB_USERNAME' -p '$DB_PASSWORD' && \
  ./bashful email setup -e '$EMAIL_USERNAME' -p '$EMAIL_PASSWORD' && \
  ./bashful webserver config nginx && \
  ./bashful server status"
```

I am not done tho~

```bash
#!/bin/bash

# Log file path (writing under /var/log usually needs root)
log_file="/var/log/setup_script.log"

# Append a timestamped message to the log file
log_message() {
  local timestamp
  timestamp=$(date +"%Y-%m-%d %H:%M:%S")
  echo "[$timestamp] $1" >> "$log_file"
}

# Generate a random 16-character alphanumeric password
generate_password() {
  LC_ALL=C tr -dc 'a-zA-Z0-9' < /dev/urandom | head -c 16
}

# Retrieve the machine's first IP address.
# Note: hostname -I lists local addresses; behind NAT this is a private IP,
# not the public address an A record normally points to.
get_machine_ip() {
  hostname -I | awk '{print $1}'
}

# Overwrite an existing A record using the Cloudflare API.
# PUT needs the record's ID in the URL (POST to .../dns_records creates a new
# record instead); <your_dns_record_id> is a placeholder like the others.
update_dns_cloudflare() {
  local email="<your_cloudflare_email>"
  local api_key="<your_cloudflare_api_key>"
  local zone_id="<your_cloudflare_zone_id>"
  local record_id="<your_dns_record_id>"
  local domain="<your_domain>"
  local subdomain="<your_subdomain>"
  local machine_ip payload response
  machine_ip=$(get_machine_ip)

  # Build the JSON body with jq so the values are quoted correctly
  payload=$(jq -n --arg name "$subdomain.$domain" --arg ip "$machine_ip" \
    '{type: "A", name: $name, content: $ip, ttl: 1, proxied: false}')

  # Cloudflare API request
  response=$(curl -s -X PUT "https://api.cloudflare.com/client/v4/zones/$zone_id/dns_records/$record_id" \
    -H "X-Auth-Email: $email" \
    -H "X-Auth-Key: $api_key" \
    -H "Content-Type: application/json" \
    --data "$payload")

  # Check if the API request was successful
  if [[ "$(echo "$response" | jq -r '.success')" != "true" ]]; then
    log_message "Error updating DNS records: $response"
    exit 1
  fi
  log_message "DNS records updated successfully."
}

# Set up the log file
setup_log_file() {
  touch "$log_file" || { echo "Error creating log file." >&2; exit 1; }
  log_message "Setup script started."
}

# Execute tasks on the remote server
execute_remote_tasks() {
  local remote_user="$1"
  local remote_address="$2"
  local db_username="$3"
  local db_password="$4"
  local email_username="$5"
  local email_password="$6"

  log_message "Executing tasks on the remote server."

  # SSH into the remote server. The double-quoted command string expands the
  # credentials locally before sending; the inner single quotes keep each
  # value a single word for the remote shell.
  if ssh "$remote_user@$remote_address" "
    # Change to the desired directory
    cd /path/to/remote/directory || exit 1
    # Additional tasks can be performed here
    # …
    # Example: Execute tasks using Bashful
    ./bashful website setup mywebsite &&
    ./bashful db setup mysql -u '$db_username' -p '$db_password' &&
    ./bashful email setup -e '$email_username' -p '$email_password' &&
    ./bashful webserver config nginx &&
    ./bashful server status
  "; then
    log_message "Tasks on the remote server completed successfully."
  else
    log_message "Error executing tasks on the remote server."
    exit 1
  fi
}

# Main function
main() {
  setup_log_file

  local db_username="db_user_$(generate_password)"
  local db_password=$(generate_password)
  local email_username="email_user_$(generate_password)"
  local email_password=$(generate_password)

  log_message "Starting the setup process."

  # Additional setup steps on the local machine can be added here
  # …

  update_dns_cloudflare

  # Pivoting to the remote server
  local remote_user="<remote_user>"
  local remote_address="<remote_address>"

  # Execute tasks on the remote server
  execute_remote_tasks "$remote_user" "$remote_address" "$db_username" "$db_password" "$email_username" "$email_password"

  echo "Autogenerated Credentials:"
  echo "DB Username: $db_username"
  echo "DB Password: $db_password"
  echo "Email Username: $email_username"
  echo "Email Password: $email_password"

  log_message "Setup script completed successfully."
}

# Run the main function
main
```

Needs debugging

I am trying to use an F#-style data pipeline approach with Elixir as a backend for my tools

I would like a functional data pipeline for my processes

What's F# doing?

https://www.youtube.com/watch?v=mTPpL6LpWgg&list=PLWlesqsdrk8HuB-uvq0wwRdFecr_bFACG&index=39

Society is a coral reef

And technology is our fish

Arch

Hyprland

Kitty

Micro

Mc

sshfs

fzf

exa

bat

Z/Oxide

(Ascillarium)

Sysdig

Fuzzy finder and sshfs will be an interesting combo

Bc if I am not mistaken, with that I should be able to fuzzy search remote directories too
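A minimal sketch of the fzf plus sshfs combo, assuming the remote side is already mounted as a local folder (for example `~/remote/nas`, a hypothetical path). Once mounted, fzf does not care which roots are remote; it just filters the paths `find` prints. `list_candidates` and `fuzzy_pick` are hypothetical names.

```bash
#!/usr/bin/env bash
# list_candidates ROOT...: print every regular file under the given roots,
# one path per line. A root can be a local folder or an sshfs mount point.
list_candidates() {
  local root
  for root in "$@"; do
    if [ ! -d "$root" ]; then
      echo "skipping $root: not a directory (not mounted?)" >&2
      continue
    fi
    find "$root" -type f 2>/dev/null
  done
}

# fuzzy_pick ROOT...: let fzf filter the combined list and print the chosen path
fuzzy_pick() {
  list_candidates "$@" | fzf
}
```

Usage would be `fuzzy_pick ~/projects ~/remote/nas`. Walking a large remote tree over sshfs can be slow, since every directory listing is a network round trip, so a narrower remote root may help.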

Sysdig

Neomutt

Like, we are not the first generation living, and that applies no matter which generation you are in.

In Linux programming it's interesting

Bc the word complexity of a command relates to the tool's age in an interesting manner

It's a chronological emergence point, which is reflected in the choice of names

Within the general spiral of historical momentum

But these tools are also usually quite powerful, bc ppl have been working on them the longest

Like cd, or mv

I love mv, but it's an odd friend

Like it can rename and move files

`mv file1 file2`

Renames it

`mv file1 directoryx`

Moves it there
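A quick way to see both jobs of `mv` side by side, run in a throwaway directory so nothing real gets moved:

```bash
#!/usr/bin/env bash
# Show both jobs of mv in a throwaway directory.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1

touch file1
mkdir directoryx

mv file1 file2        # file2 does not exist yet: file1 is renamed to file2
mv file2 directoryx   # directoryx is an existing directory: file2 moves into it

ls directoryx         # prints: file2
```

If the target is an existing file instead, `mv` overwrites it without asking; GNU `mv -i` prompts first and `mv -n` never overwrites.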

I always forget it, and try to use mw for some reason

Like, with its simple use but general applicability, it is a valuable tool to the user, I would assume, if only a useful one; individual experiences may vary.

Like, Hyprland is amazing, but cd is cd

What would you do without cd?

So neat, love it

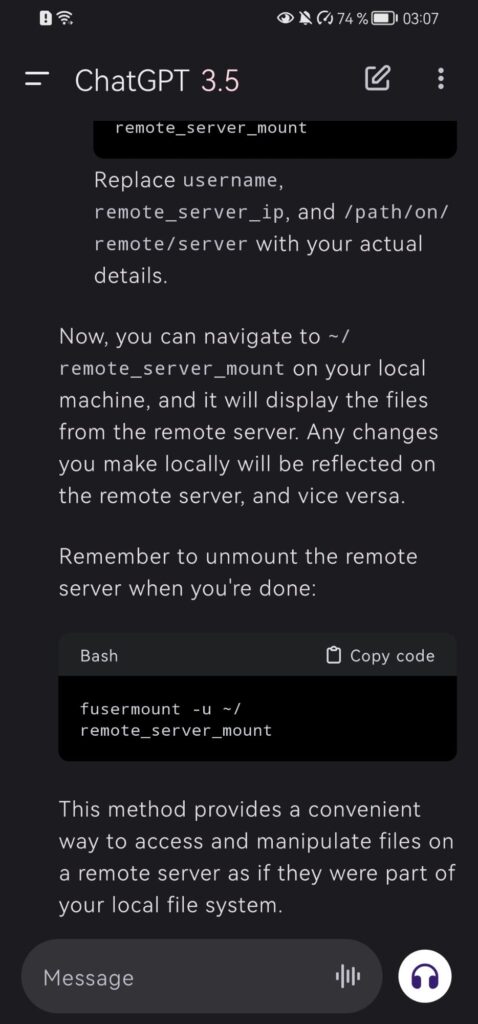

Remote server connection

That's what I want

To mount a server like a folder
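A minimal sketch of mounting a server like a folder with sshfs, assuming the host is set up in `~/.ssh/config` with key-based login and that mounts should live under `~/remote/<host>`. Both the folder layout and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: mount remote hosts as folders under ~/remote/<host>.

# remote_mountpoint HOST: print the local folder used for HOST
remote_mountpoint() { printf '%s/remote/%s\n' "$HOME" "$1"; }

# mount_remote HOST [REMOTE_DIR]: mount HOST:REMOTE_DIR (default: the remote
# home directory) and print the local mount point. Reuses an existing mount.
mount_remote() {
  local host="$1" remote_dir="${2:-}" mnt
  mnt=$(remote_mountpoint "$host")
  mkdir -p "$mnt" || return
  if ! mountpoint -q "$mnt"; then
    # reconnect: re-establish the SSH session after drops;
    # ServerAliveInterval is passed through to ssh to detect dead links
    sshfs "$host:$remote_dir" "$mnt" -o reconnect -o ServerAliveInterval=15 || return
  fi
  echo "$mnt"
}

# umount_remote HOST: unmount again (fusermount3 on FUSE 3, fusermount on FUSE 2)
umount_remote() {
  local mnt
  mnt=$(remote_mountpoint "$1")
  fusermount3 -u "$mnt" 2>/dev/null || fusermount -u "$mnt"
}
```

With that, `cd "$(mount_remote nas)"` (where `nas` is an example ssh alias) makes the server just another folder for cd, mv, and fzf.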

Now the planes are leveled

There is no distinction between my firewall and my NAS or the websites

I got Cloudflare Workers for the rabitty domain

Thundervm for the fedi and WP projects

And my website is with my hoster

Like, I needed to flash WP via FTP, they literally just gave me hosting

Bc I was looking to host the pages I was writing

Till I found WP and was happy with that

Like, there is a WP-CLI, but I had a feeling something like this was possible

That was such an obvious thing to want to do

Bc it is all working in an environment where things are much faster than your thinking

So all processes are themselves only experienced through the time they take to complete

But that also means there is a zero point of human limitation, where everything is just zero

The distance between 2 paths is zero, or your time to type and hit enter

It's like your path from home to work

But instead of a street it's abstractions, like displaying text, which means taking time to read

So by simply not doing them, there must be a singularity point where it takes no perceived time to do, making it instant

And thereby leveling out dimensionality and putting you into superposition over a node network

It's probably what requires root on its paths

Bc it is a mirroring metaphor for a key part of the human psyche, which mirrors existence itself

Like in a mercurial manner

I will see how it looks in practice tho^~^

I forgot fuzzy finder

Exists~

Like, I would like a seamless network traversal

Okai, if I am not mistaken

There is a default login on the switch

Like, mine managed to connect on Windows, but it can't find the web panel

I will try to ssh into the flat box with the default creds, hopefully that works

Then I can actually get into setting up that network

Then I need to get the other small server working

It has 2 eth connectors

I will try to use it as a firewall

Once the switch works I will try to connect it on the same network and then ssh into it

And the NAS/media server
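Before trying the default credentials, it can help to check which boxes actually answer on the SSH port. A minimal sketch using bash's built-in `/dev/tcp` redirection and coreutils `timeout`; the function names are hypothetical, and the addresses to probe depend on the switch's subnet.

```bash
#!/usr/bin/env bash
# ssh_reachable HOST [PORT]: succeed if HOST accepts a TCP connection on PORT
# (default 22) within 2 seconds. Relies on bash's /dev/tcp, so it needs bash.
ssh_reachable() {
  local host="$1" port="${2:-22}"
  # host and port are passed as $0 and $1 so they are never parsed as code
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# probe_hosts HOST...: report which hosts answer on port 22
probe_hosts() {
  local host
  for host in "$@"; do
    if ssh_reachable "$host"; then
      echo "$host: ssh open"
    else
      echo "$host: no answer on 22"
    fi
  done
}
```

Usage would be `probe_hosts 192.168.1.1 192.168.1.2`, with placeholder addresses; any host reported as open is a candidate for the ssh login.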

Btw I finally read the Hyprland default config

Now left mouse moves a window, right mouse dynamically resizes it, the same works with the keyboard, and windows stack into the 3rd dimension, along with the usual workspaces

Also the menu, and I added Vim Vixen to Firefox

It makes it possible to use Vim keys and commands, including the : bar, but it's a bit annoying

I would like direct keyboard shortcuts instead of the in-between command-line call

I will try to use the extended keyboard as a 1-x namespace,

Like the numpad

I just need to figure out how to alias that into the Hyprland conf

Like, I am trying to figure out if I can seamlessly connect a remote server as a folder

So I can press super shift 1 and it tunnels to another saved server

Like scp

But as an scd command

Like ssh, but seamless
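A minimal sketch for the numpad-as-server-namespace idea: a shell function that prints Hyprland `bind` lines mapping SUPER + numpad 1..9 to an ssh session in Kitty, one key per saved server. It assumes the numpad keys arrive as the xkb keysyms `KP_1`..`KP_9` (NumLock on; with NumLock off they send names like `KP_End`), that `kitty <command>` opens the session, and that the server names are ssh config aliases; `gen_server_binds` is hypothetical. Using the numpad also avoids SUPER SHIFT + number row, which the default config already uses to move windows to workspaces.

```bash
#!/usr/bin/env bash
# gen_server_binds SERVER...: print one Hyprland bind per server, mapping
# SUPER + numpad 1..9 to "kitty ssh SERVER". Servers past the 9th are skipped.
gen_server_binds() {
  local i=1 server
  for server in "$@"; do
    if (( i > 9 )); then
      echo "only 9 numpad keys, skipping: $server" >&2
      continue
    fi
    printf 'bind = SUPER, KP_%d, exec, kitty ssh %s\n' "$i" "$server"
    i=$((i + 1))
  done
}
```

The output could go to its own file, e.g. `gen_server_binds nas firewall vps > ~/.config/hypr/servers.conf`, pulled in with a `source = ~/.config/hypr/servers.conf` line in `hyprland.conf`, so the server list stays separate from the main config.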