Like

You can just put up an HTML form and let ppl join and pipe that into process_input.sh

Like you could spam that

But the idea is

Why would you spam it, you have been given the power to just exchange somewhere where that behavior is normal

And core

Shall output whatever it does as a log stream

So you can do data analysis on it

To stdout

Error and non-error are treated the same, as 1 datastream to analyze

You can derive bank statements to do bookkeeping

Or analyse node network depth
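A minimal sketch of the one-datastream idea. `core_step` is a hypothetical stand-in, not the real core; it only exists to write one line to stdout and one to stderr. `2>&1` folds errors into the same stream, so a single pipeline can analyze everything.

```shell
#!/bin/bash
# Hypothetical stand-in for core: one normal line, one error line.
core_step() {
    echo "sent 1.0 to addr_a"
    echo "failed to reach addr_b" >&2
}

# Merge stderr into stdout so both kinds of event form one log stream.
merged_log() {
    core_step 2>&1
}

# Example analysis: count lines by their first word.
merged_log | awk '{ print $1 }' | sort | uniq -c
```

The same pattern should apply to any command in place of `core_step`; what you count or group by depends on what the real log lines look like.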

Like as a prototype

You still have the admin passing judgment here

They can blacklist ppl

Which we dont want

Like its allowed

Like we let ppl experiment with it and see what crystallizes out of the process

And if we see a stable montheism

We use that, or both, we’ll see

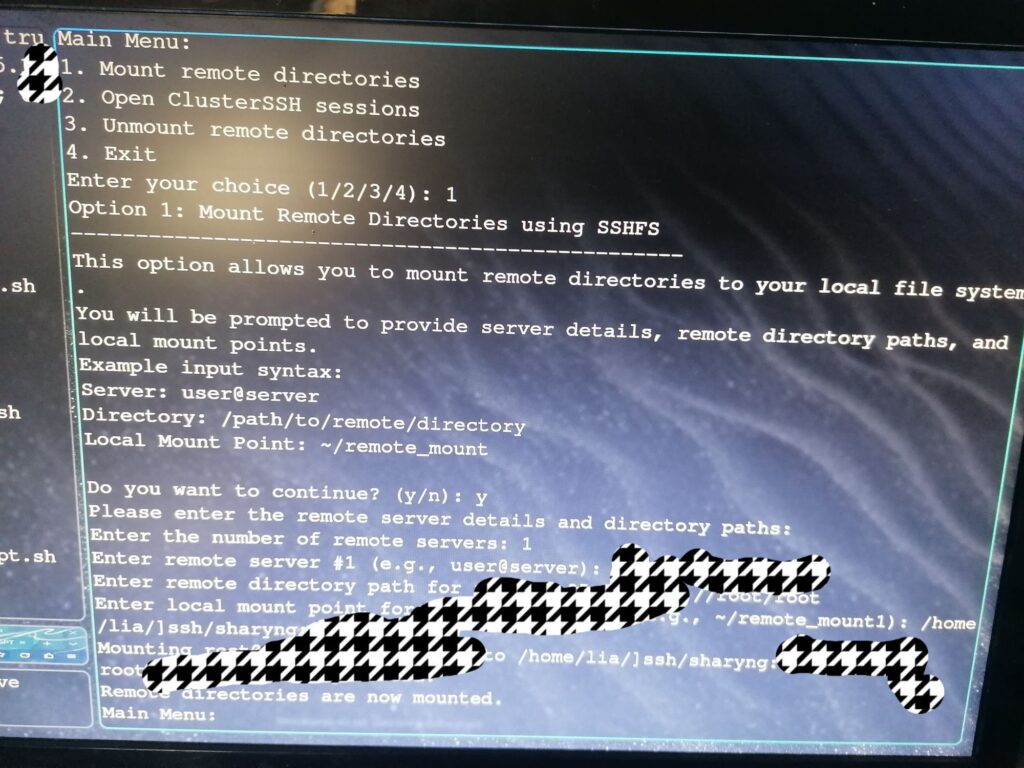

Yes, you can run it live

But running live we dont want on a server, bc there are admins

It's an interface for admins to test, and then we see what worked for them

Best case we go with something like veilid or a chain

Bc then we can avoid the admins

And let individuals interact independently

You can run a company pay structure or just share, but both are just a network switch away

Like this

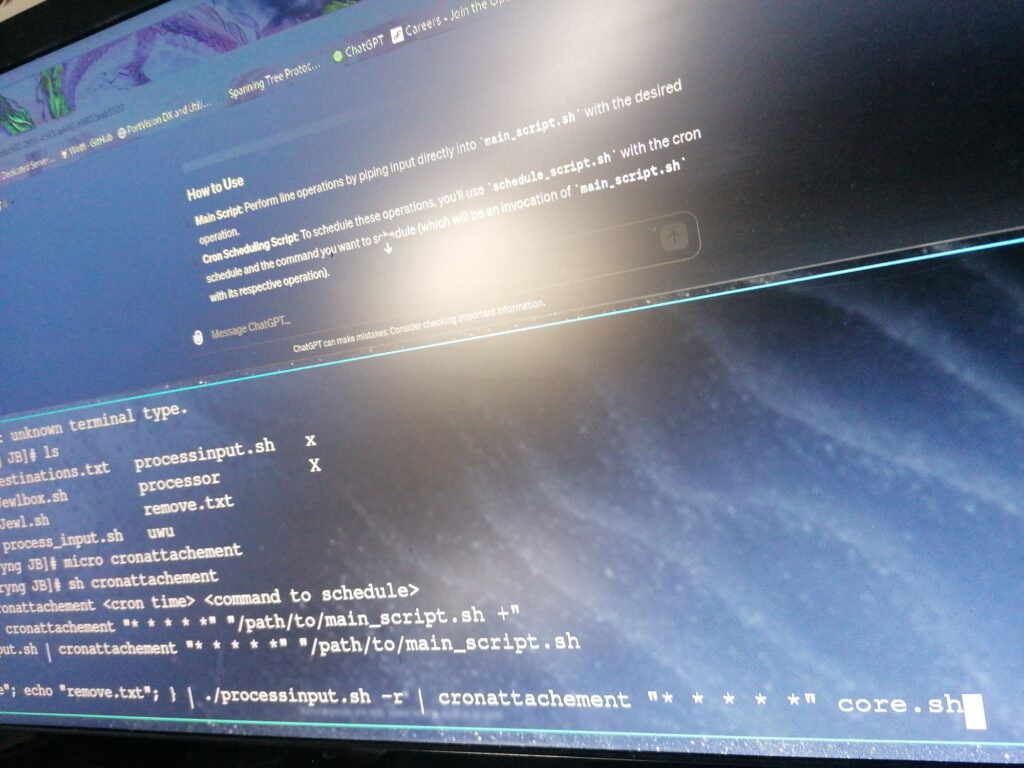

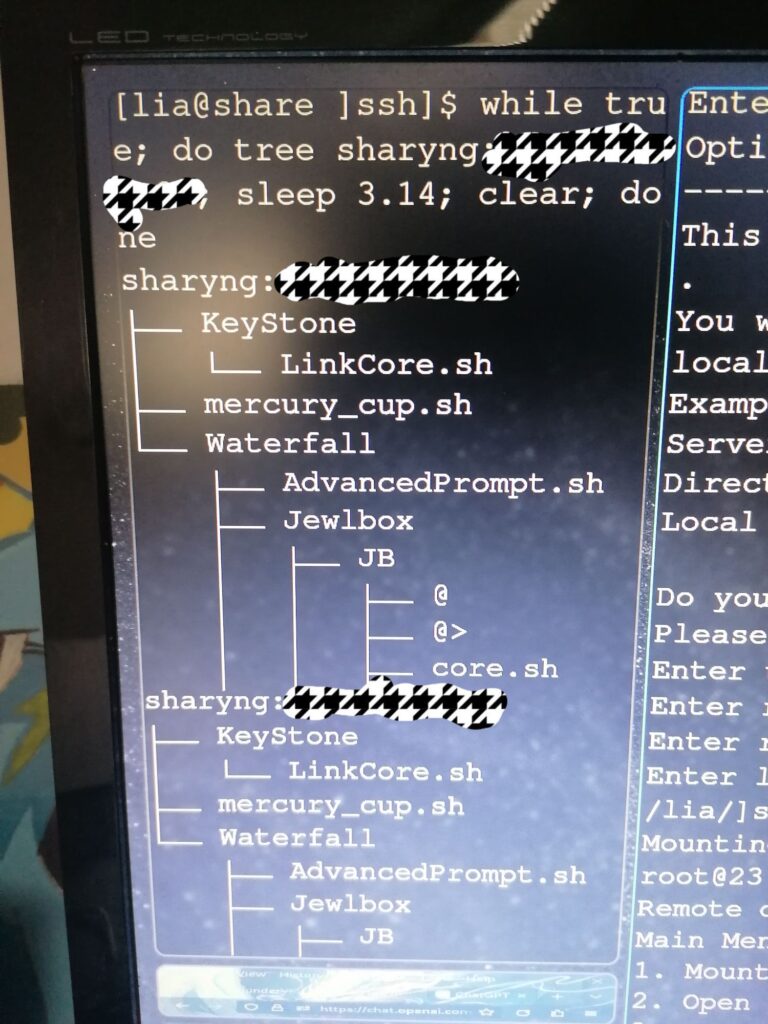

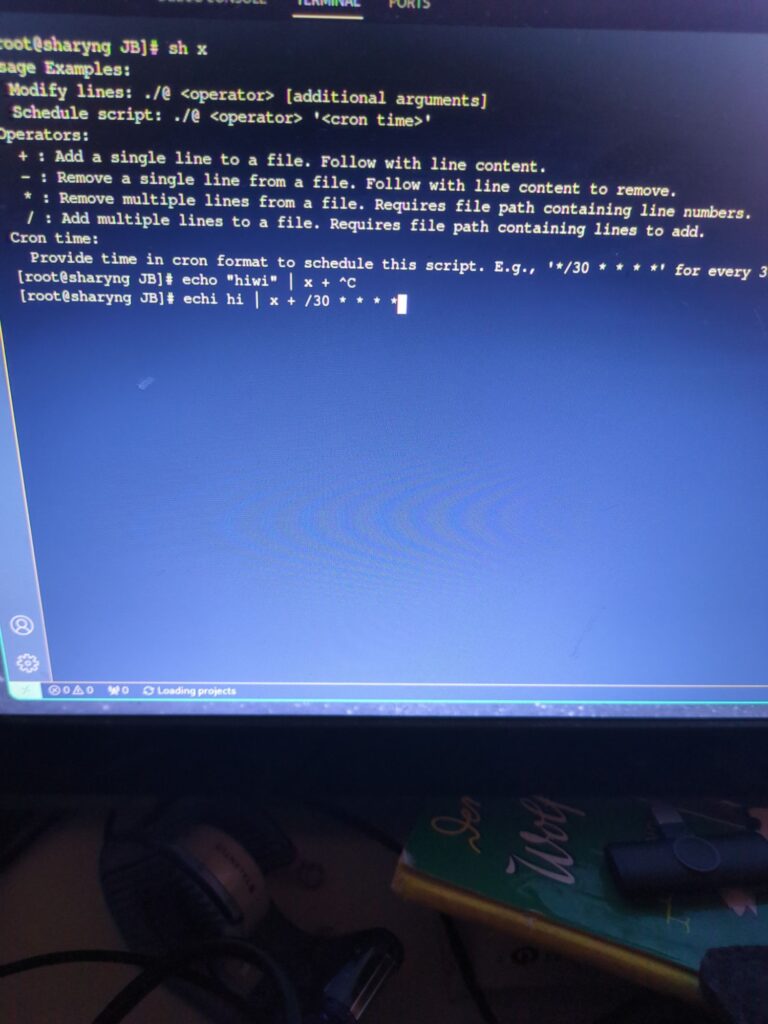

It detects if you give it a file or echo, and accordingly adds the lines to the datastream

Removes them with the -r flag, inverting the function of the tool

And then you pipe it into the cron modifier

Which makes it a file and then schedules that file with the given time

Like cron is not complete yet
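A rough sketch of what the cron stage could look like, since it is not finished yet. Assumptions: `cron_stage`, `CORE_CMD`, and the argument order are made up for illustration. It saves the piped stream to a file and only prints the crontab line, it does not install anything.

```shell
#!/bin/bash
# Assumed command that cron would run against the saved list.
CORE_CMD="./core.sh"

cron_stage() {
    local schedule="$1" list_file="$2"
    # Persist whatever arrives on stdin as the destination list.
    cat > "$list_file"
    # Emit the crontab entry; pipe it to `crontab -` only once you trust it.
    printf '%s %s %s\n' "$schedule" "$CORE_CMD" "$list_file"
}

# Example:
#   printf 'addr_a\naddr_b\n' | cron_stage "0 * * * *" /tmp/destinations.txt
```

Printing the entry instead of installing it keeps the stage pipeable and easy to check before it touches the real crontab.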

In this case, the core code

Or with my naming

If i saw correctly, you can use multiple files, but

You can feed it an entire directory

To test the modified script, let’s assume you’ve saved it as process_stream.sh and made it executable with chmod +x process_stream.sh. We’ll create a simple pipeline that demonstrates both adding to and removing from the data stream using the -r flag.

Scenario:

- Adding to the Data Stream:

- We’ll echo several lines of text and a filename that contains specific lines we want to add to our data stream.

- Removing from the Data Stream:

- We'll use the -r flag to remove certain lines from our data stream, including lines from a file.

Setup:

- Create two files, add.txt and remove.txt, for the demonstration.

add.txt contains:

Line to add 1

Line to add 2

remove.txt contains:

Line to remove

Test Pipeline:

- Adding Lines and File Contents:

First, let’s add lines directly and from add.txt:

{ echo "Line to add 1"; echo "Line to add 2"; echo "add.txt"; } | ./process_stream.sh

This command simulates adding lines to your data stream. Since it's the initial setup, it will just output the contents of add.txt along with the echoed lines.

- Removing Lines and File Contents:

Next, to demonstrate removing lines, including those from remove.txt, we’ll append the removal operation in another call to the script:

{ echo "Line to remove"; echo "remove.txt"; } | ./process_stream.sh -r

For this to work as intended in a single pipeline where you see the effect of both adding and removing operations sequentially, you'd actually need to chain these operations in a way that reflects a real data stream manipulation. However, given the script's current design, it treats each execution independently, starting with an empty data stream.

To effectively demonstrate both in a scripted, single execution context, consider the following hypothetical example that assumes a continuous stream or modifications to the script to support persistent state:

# Hypothetical, assumes persistent state or chained operations

{ echo "Initial line"; echo "add.txt"; echo "Line to remove" | ./process_stream.sh; echo "remove.txt" | ./process_stream.sh -r; }

This setup doesn't directly work as shown due to the stateless nature of shell scripts and their execution environment. The script processes input as a new instance each time it's called, without memory of previous calls. Therefore, to truly test adding and then removing, you'd need a more complex setup or a script modification to maintain state across calls, such as using temporary files or modifying the script to handle continuous input in a single execution more dynamically.
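One possible way to get that persistent state is a plain state file. This is a sketch only: `STATE_FILE`, `stream_add`, and `stream_remove` are hypothetical names and are not part of process_stream.sh.

```shell
#!/bin/bash
# Hypothetical location of the persistent stream; override as needed.
STATE_FILE="${STATE_FILE:-./stream.state}"

stream_add() {
    # Append every stdin line to the persistent stream.
    cat >> "$STATE_FILE"
}

stream_remove() {
    # Drop every stored line that exactly matches a stdin line.
    local patterns tmp
    patterns=$(mktemp) && tmp=$(mktemp) || return 1
    cat > "$patterns"
    # With no patterns or no state yet, leave the stream unchanged.
    if [ -s "$patterns" ] && [ -f "$STATE_FILE" ]; then
        # grep exits 1 when nothing survives; that is not an error here.
        grep -vxFf "$patterns" "$STATE_FILE" > "$tmp" || true
        mv "$tmp" "$STATE_FILE"
    fi
    rm -f "$patterns" "$tmp"
}

# Example, two separate calls sharing state:
#   printf 'Initial line\nLine to remove\n' | stream_add
#   printf 'Line to remove\n' | stream_remove
#   cat "$STATE_FILE"
```

Because the stream lives in a file, the add and the remove can happen in different invocations, which is exactly what the in-memory array cannot do.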

#!/bin/bash

# Initialize an empty array to hold the data stream

declare -a data_stream

# Function to add input to the data stream

add_to_stream() {

data_stream+=("$1")

}

# Function to remove input from the data stream

remove_from_stream() {

# Temporarily store the data stream in another array to avoid modification issues during iteration

local temp_stream=("${data_stream[@]}")

data_stream=() # Clear the original data stream

# Iterate over the temporary stream

for line in "${temp_stream[@]}"; do

# Only add back lines that do not match the input

if [[ "$line" != "$1" ]]; then

data_stream+=("$line")

fi

done

}

# Function to process each input line

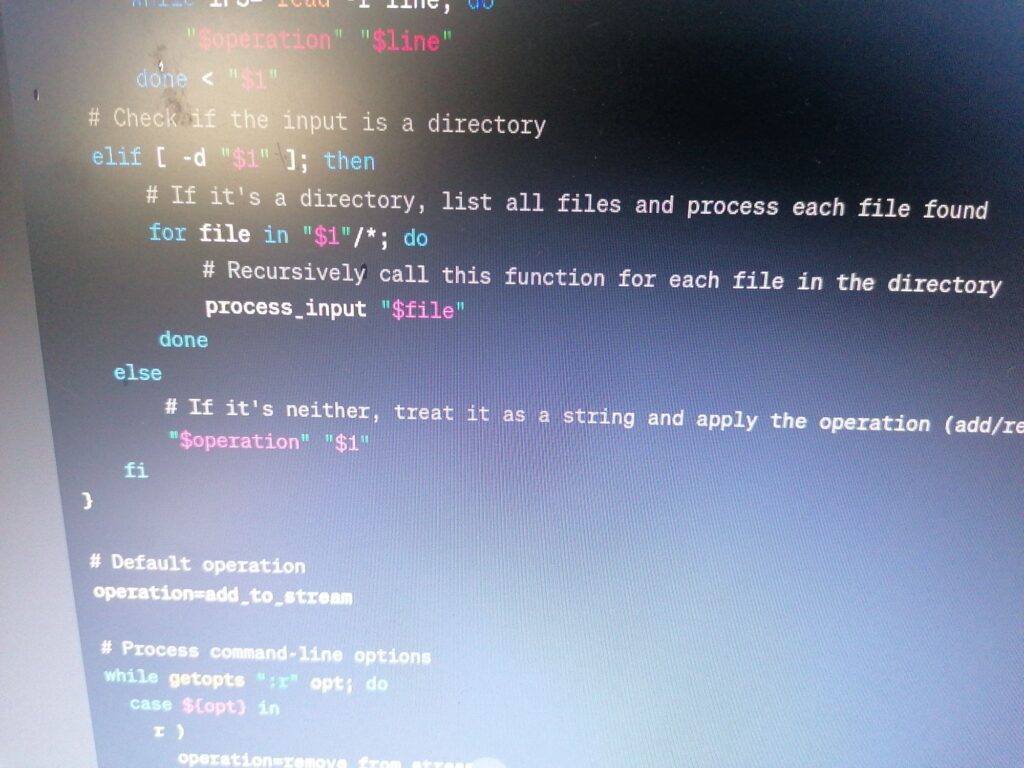

process_input() {

# Check if the input is a file

if [ -f "$1" ]; then

while IFS= read -r line; do

"$operation" "$line"

done < "$1"

# Check if the input is a directory

elif [ -d "$1" ]; then

# If it's a directory, list all files and process each file found

for file in "$1"/*; do

# Recursively call this function for each file in the directory

process_input "$file"

done

else

# If it's neither, treat it as a string and apply the operation (add/remove)

"$operation" "$1"

fi

}

# Default operation

operation=add_to_stream

# Process command-line options

while getopts ":r" opt; do

case ${opt} in

r )

operation=remove_from_stream

;;

\? )

echo "Invalid option: $OPTARG" 1>&2

exit 1

;;

esac

done

shift $((OPTIND -1))

# Read from STDIN line by line if no arguments are provided

if [ "$#" -eq 0 ]; then

while IFS= read -r line; do

process_input "$line"

done

else

# If the script receives arguments directly, process each argument

for arg in "$@"; do

process_input "$arg"

done

fi

# Output the final data stream

printf "%s\n" "${data_stream[@]}"

not debugged

how to make it use negation makes me break my mind tho

bc

In practice, the script can be used in a Unix pipeline like so:

echo "path/to/file.txt" | ./process_input.sh | some_other_command

or for direct string processing:

echo "Some text" | ./process_input.sh | some_other_command

Here's how you might set up your pipeline:


{ echo "Direct input line"; echo "data/file1.txt"; echo "data/file2.txt"; } | ./process_input.sh | sort | uniq -c

Example Output:

1 Direct input line

1 First file, line 1

1 First file, line 2

1 Second file, line 1

1 Second file, line 2

#!/bin/bash

# Function to process each input line

process_input() {

# Check if the input is a file

if [ -f "$1" ]; then

# If it's a file, output its contents

cat "$1"

# Check if the input is a directory

elif [ -d "$1" ]; then

# If it's a directory, list all files and process each file found

for file in "$1"/*; do

# Recursively call this function for each file in the directory

process_input "$file"

done

else

# If it's neither, treat it as a string and simply output it

echo "$1"

fi

}

# If the script receives arguments directly, process each argument

if [ "$#" -gt 0 ]; then

for arg in "$@"; do

process_input "$arg"

done

else

# Otherwise read from STDIN line by line

while IFS= read -r line; do

process_input "$line"

done

fi

Aaah i see

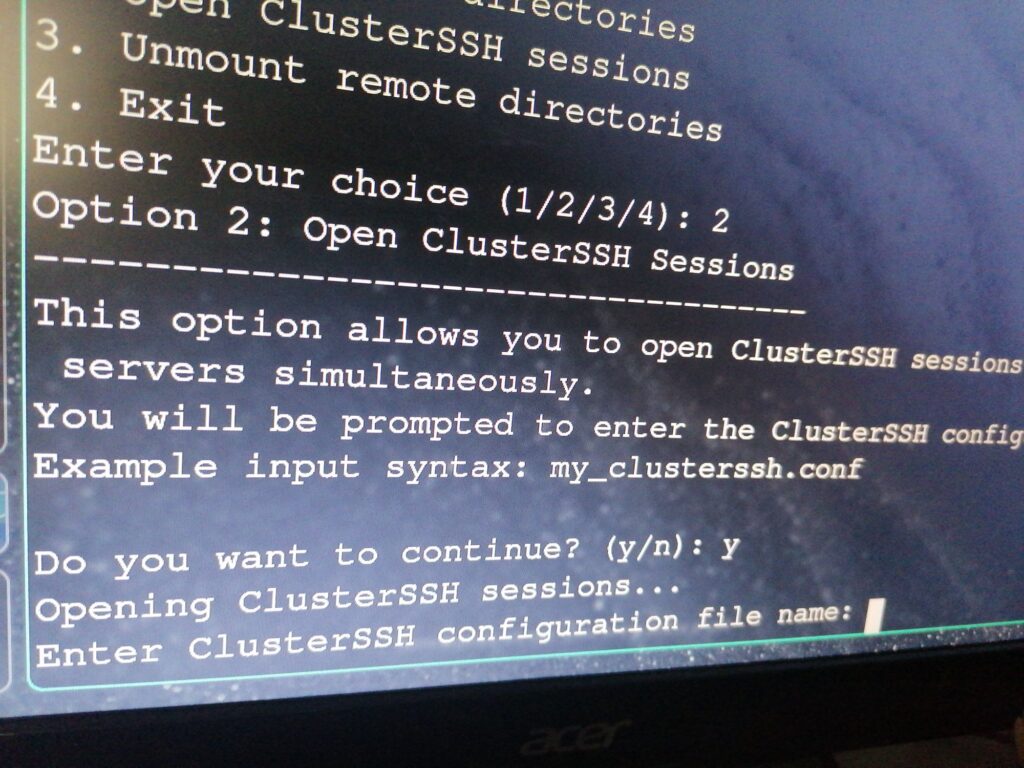

It only works when the ssh session is constantly running in the bg

How i make this permanent

Neat

I will see about how to do that

I like it

Comfy

Would like inside fluff tho

Like there are cover fabrics for those

I will wash my hair out with tea

It removes all the hair stuff you put in them

Like I love the fabric

Its really techy for the amount of cotton in it

I like it

Hood is big enough to hide in social situations

Terence McKenna – money is obsolete (14)

https://youtube.com/watch?v=-XJKiFV68FY&si=519QXF1J4Vh-sjHz

Noo, measuring by the kid means, we give the biggest tools to the youngest ppl

Bc existence is an abstraction

Against time, called decay/ death

Its anti Entropic

If entropy is growth/multiplying of structure and pattern

Then value is a negation of that

Its time defined by the events of decay within it

, mostly bound into objects

Like its probably more complex

But i feel like thats as existing or not as you can get

Zero is still a strange friend

Like its

That

Its value, including potential value

Its a probability curve with multiple segmented vectors

Giving 1 negation of time called value

Which we trade for other time or objects, measured by their time and potential impact on time

Like even Beauty

You got the lamp bc its pretty

Is sense and therefore time bound

Maybe you get sad when you cant see it anymore bc your roommate moved it

The potential value is a measure of longing

The core value is a measure of time

Thats also why we can trade value for time

Bc its a negation of it

But its not a linear connection, or has to be

But then you get into social variable density, never mind

Like yes is that, but currently dont see a usecase yet~

But cool

I feel like

Value is an inverse of time

That’s why it is upside down with money

I will parse out the cron into a separate script, that can be piped

And then,

Collapse the +-/* interface

Into + /

And a -r flag

Meaning, the mirror image of a negative number line can be folded back into the positive one and then just mirror with a -reverse flag

Basically

A b

Reverse

-a -b

So you operate with

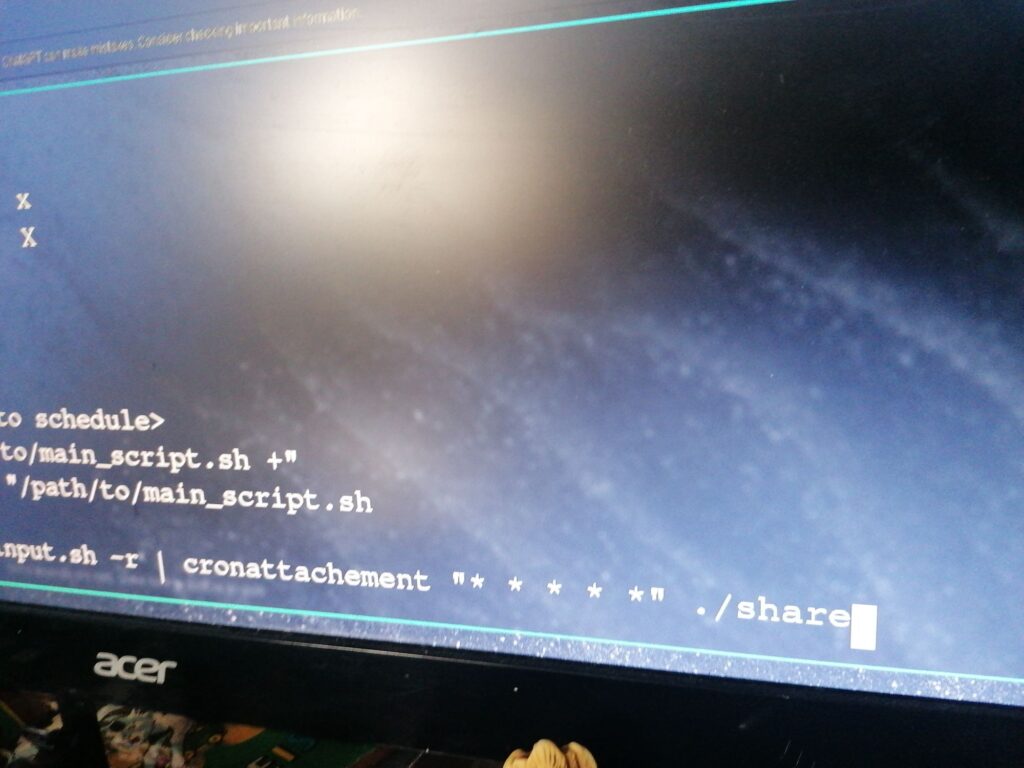

echo "filepath" | @

Which automatically adds the list

echo "address" which automatically adds a single address

echo "addr" | @

And reverse flag -r

echo "addr" | @ -r

Removes it

Like i can probably make it so you just pipe the output of whatever command gives you the addresses and

You pipe that into | @ | cron

And you can easily set up a structure in which everyone automatically gets paid and when they get paid

You can then share money between ppl without any work at all

If I make it use the sys users as a db for monero addresses

Then I don’t even need to pipe it

It automatically distributes to every user on the system

Wtf how high was I

I will let it populate payment addresses from user db;)
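A sketch of how the user db could feed the address list. Everything specific here is an assumption: the per-user file name `.payout_address`, the UID cutoff of 1000 for "real" users, and the function name. It reads passwd-format lines on stdin so it can be tried without touching the live system.

```shell
#!/bin/bash
# Assumed name of the file in each home directory that holds the address.
ADDRESS_FILE_NAME=".payout_address"

list_payout_addresses() {
    # Reads passwd-format lines (name:pw:uid:gid:gecos:home:shell) on stdin.
    local name _pw uid _gid _gecos home _shell
    while IFS=: read -r name _pw uid _gid _gecos home _shell; do
        # Skip system accounts (assumed: UID below 1000) and malformed lines.
        [ "$uid" -ge 1000 ] 2>/dev/null || continue
        # Skip users who have not provided an address file.
        [ -r "$home/$ADDRESS_FILE_NAME" ] || continue
        # The first line of the file is taken as that user's address.
        head -n 1 "$home/$ADDRESS_FILE_NAME"
    done
}

# Typical use on a live system:
#   getent passwd | list_payout_addresses
```

Adding or deleting a user (or their address file) then changes the payout list with no flags and no separate config.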

No flags no commands

It just runs and distributes money

Its a button click

Huh

Interesting

I may take a small break~

It will auto cron itself based on user input and then just be already done and setup

Actually

I need a command to do easy script interactions

But like first

Automatic setup and running after user in

And then with the command you can change the operational things,via the user making and deleting process

Like I may adapt my simpler wrapper onto that too

And then I have 1 node sitting processing transactions

As soon as that node is up

Ppl can donate to the project and everyone on the team gets money if something gets donated

Without anyone doing anything to facilitate that

Besides a server being up

filename can be changed as alias

You can change space in terms of network size and time in terms of cron scheduling

as

@ +*/ Crontime

piping a path should autodetect / as its function without providing the flag

and a string as + function

but doesnt seem to be currently working to detect, maybe me

maybe it now always needs a cron which would be a pain

but yes, manipulates space/structure and time in 1 command
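The autodetect part could be sketched like this. `detect_operator` and `expand_input` are hypothetical helpers, not what `@` currently does: an existing file is treated as `/` (add a list), anything else as `+` (add one line).

```shell
#!/bin/bash
# Pick the operator from the input itself instead of a flag.
detect_operator() {
    if [ -f "$1" ]; then
        echo "/"
    else
        echo "+"
    fi
}

# Expand each stdin line into the destinations it stands for.
expand_input() {
    local line
    while IFS= read -r line; do
        case "$(detect_operator "$line")" in
            /) cat -- "$line" ;;            # a path: emit the file's lines
            +) printf '%s\n' "$line" ;;     # a string: emit it as-is
        esac
    done
}
```

One caveat with this approach: a string that happens to be the name of an existing file will be read as a path, so addresses and file names should not collide.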

as soon as i debug core,,,

nah, permission denied on ./@, something is off

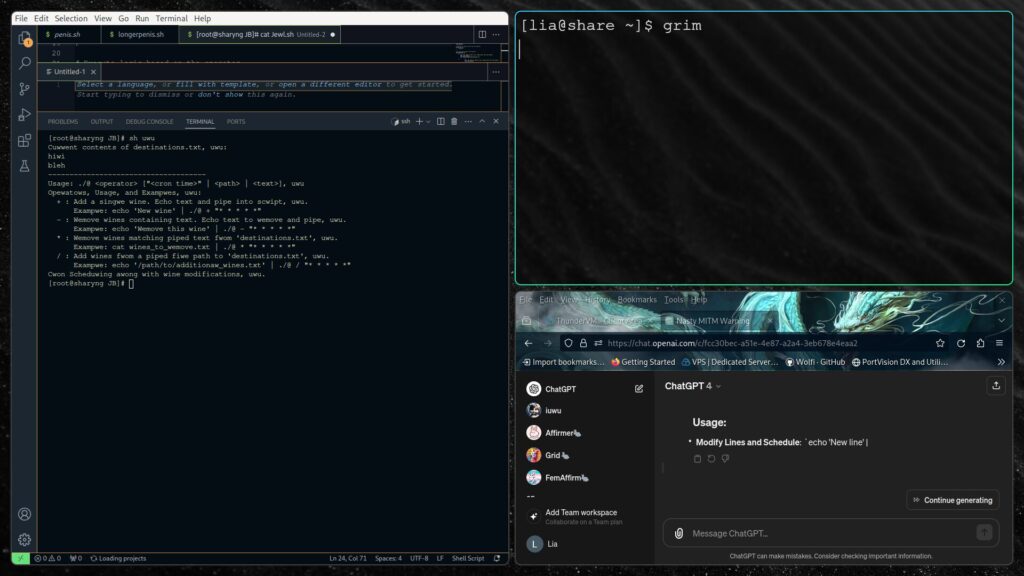

but frontend exists , backend needs a debug, it speaks uwu

and breaks your mind to comprehend

i like it<3

like if you color the printed log list by how often an address is used ,

, , i nini now..~
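A small sketch of the coloring idea, assuming the log has one address per line on stdin. The function names and the "3 or more uses is red" threshold are arbitrary choices for illustration.

```shell
#!/bin/bash
# Rank addresses by how often they appear.
address_counts() {
    # Output: "<count> <address>", most used first.
    sort | uniq -c | sort -k1,1nr -k2 | awk '{ print $1, $2 }'
}

# Highlight heavily used addresses with an ANSI color.
colorize_counts() {
    # Red for addresses used 3+ times, unchanged otherwise.
    awk '{ if ($1 >= 3) printf "\033[31m%s\033[0m\n", $0; else print }'
}

# Example:
#   cat destinations.log | address_counts | colorize_counts
```

The counting and the coloring are kept as separate stages so the counts can also be piped into other analysis without escape codes in the way.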

#!/bin/bash

# Define the script path (adjust to your script's actual path, uwu)

SCRIPT_PATH="/path/to/@"

# Display the cuwwent contents of destinations.txt at the stawt, uwu

echo "Cuwwent contents of destinations.txt, uwu:"

cat destinations.txt

echo "-------------------------------------"

# Dispway usage exampwes and expwain featuwes, uwu

print_usage() {

echo "Usage: ./@ <operator> [\"<cron time>\" | <path> | <text>], uwu"

echo "Opewatows, Usage, and Exampwes, uwu:"

echo " + : Add a singwe wine. Echo text and pipe into scwipt, uwu."

echo " Exampwe: echo 'New wine' | ./@ + \"* * * * *\""

echo " - : Wemove wines containing text. Echo text to wemove and pipe, uwu."

echo " Exampwe: echo 'Wemove this wine' | ./@ - \"* * * * *\""

echo " * : Wemove wines matching piped text fwom 'destinations.txt', uwu."

echo " Exampwe: cat wines_to_wemove.txt | ./@ '*' \"* * * * *\""

echo " / : Add wines fwom a piped fiwe path to 'destinations.txt', uwu."

echo " Exampwe: echo '/path/to/additionaw_wines.txt' | ./@ / \"* * * * *\""

echo "Cwon Scheduwing awong with wine modifications, uwu."

}

# Ensure an opewatow is pwovided; the cwon scheduwe is optionaw, uwu

if [[ $# -lt 1 ]]; then

print_usage

exit 1

fi

operator=$1

cron_schedule=${2:-}

shift $(( $# < 2 ? $# : 2 )) # Pwepawe additional arguments fow use

# Validate the opewatow

if ! [[ "$operator" =~ ^[-+*/]$ ]]; then

echo "Invawid opewatow: $operator, uwu"

print_usage

exit 1

fi

# Wead piped input if pwesent, othewwise set to empty, uwu

if [ -t 0 ]; then piped_input=""; else piped_input=$(cat); fi

# Function to update cwon job fow this scwipt, uwu

schedule_with_cron() {

(crontab -l 2>/dev/null | grep -v "$SCRIPT_PATH"; echo "$cron_schedule $SCRIPT_PATH $operator") | crontab -

echo "Scwipt scheduled with cwon: $cron_schedule, uwu"

}

# Main wogic fow opewatow actions

case $operator in

+)

content="${*:-$piped_input}"

echo "$content" >> destinations.txt

;;

-)

content="${*:-$piped_input}"

# Fixed-stwing match, so swashes and dots in the text awe safe, uwu

if [[ -n "$content" ]]; then

grep -vF -- "$content" destinations.txt > destinations.tmp

mv destinations.tmp destinations.txt

fi

;;

\*)

# Wemove evewy wine that contains one of the piped wines, uwu

if [[ -n "$piped_input" ]]; then

grep -vFf <(printf '%s\n' "$piped_input") destinations.txt > destinations.tmp

mv destinations.tmp destinations.txt

fi

;;

/)

# The piped text is a fiwe path; append that fiwe's wines, uwu

[[ -f "$piped_input" ]] && cat -- "$piped_input" >> destinations.txt || echo "No weadabwe fiwe path weceived, uwu."

;;

esac

# Scheduwe with cwon if a scheduwe is pwovided, uwu

[[ -n "$cron_schedule" ]] && schedule_with_cron

# Dispway the updated contents of destinations.txt, uwu

echo "Updated contents of destinations.txt, uwu:"

cat destinations.txt

echo "-------------------------------------"

It crons core

And then uses string and list manipulations to change the data amount

Now I need to make it let you choose the cron interval

It is aliased as @

With +-/* modifier flags

Whatever you pipe into it, gets piped to the next script in the pipe

It should

Admin is

Bc we want ppl to experiment with it

We need a human interface

But yes you can just bot that

not debugged

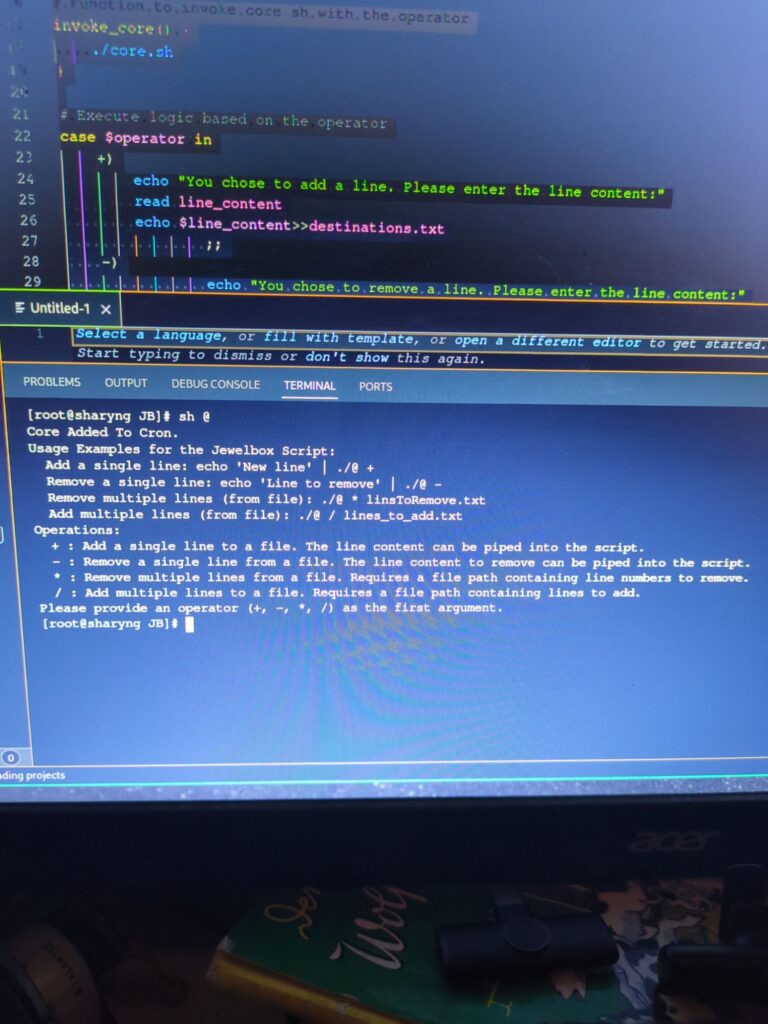

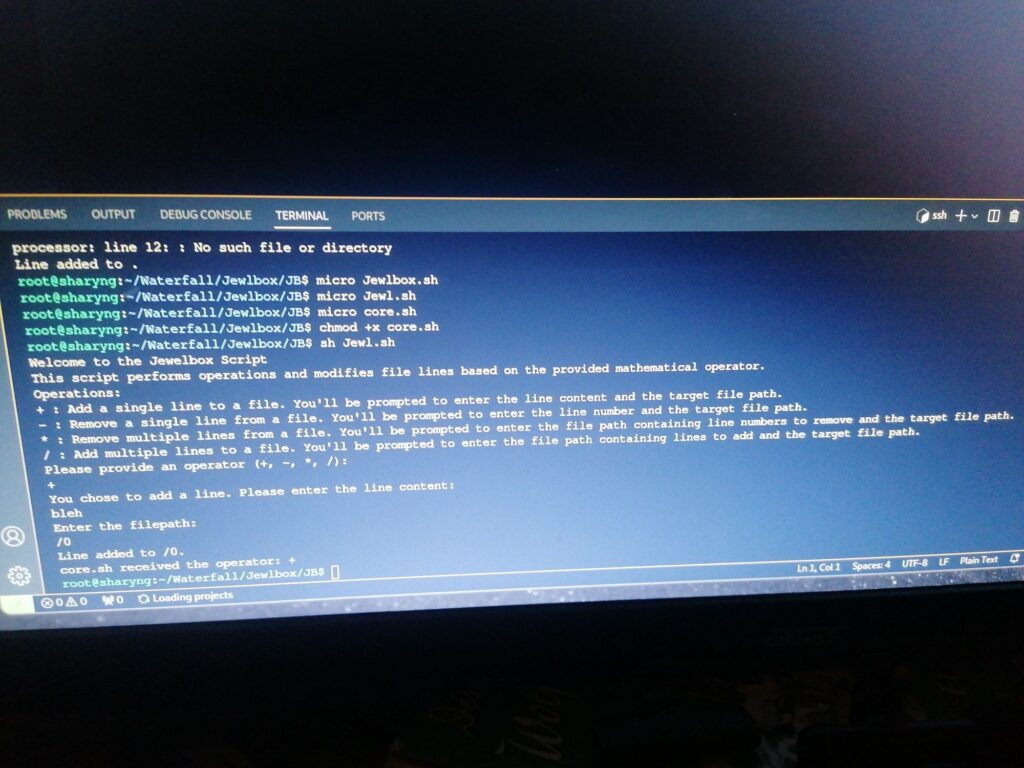

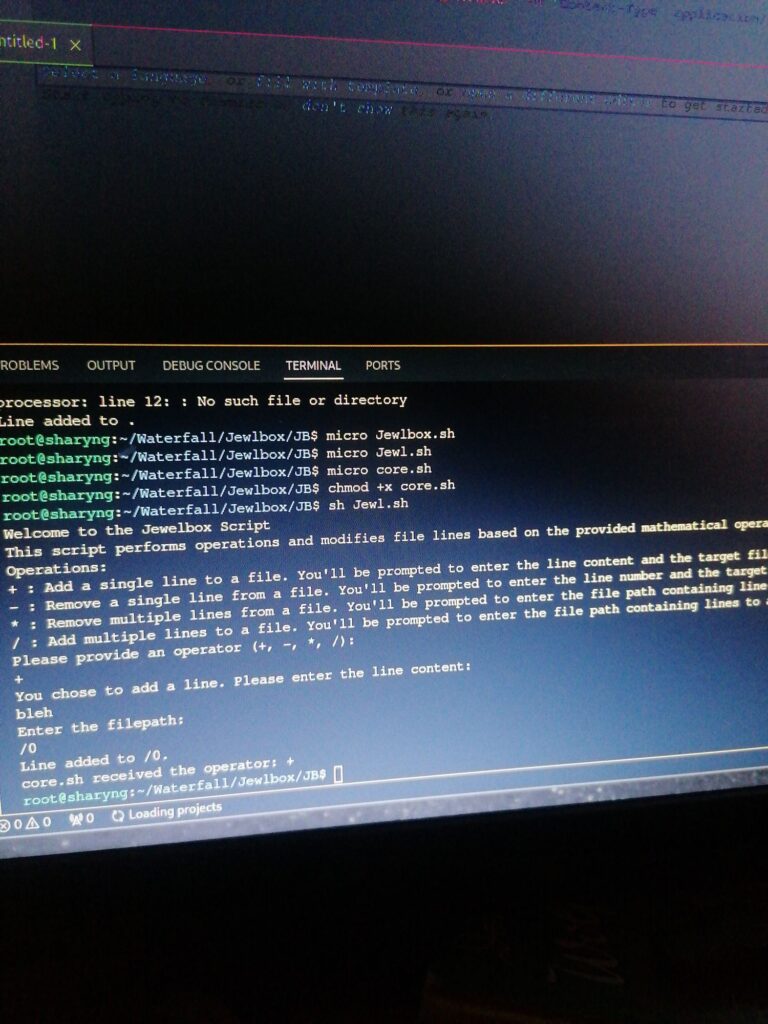

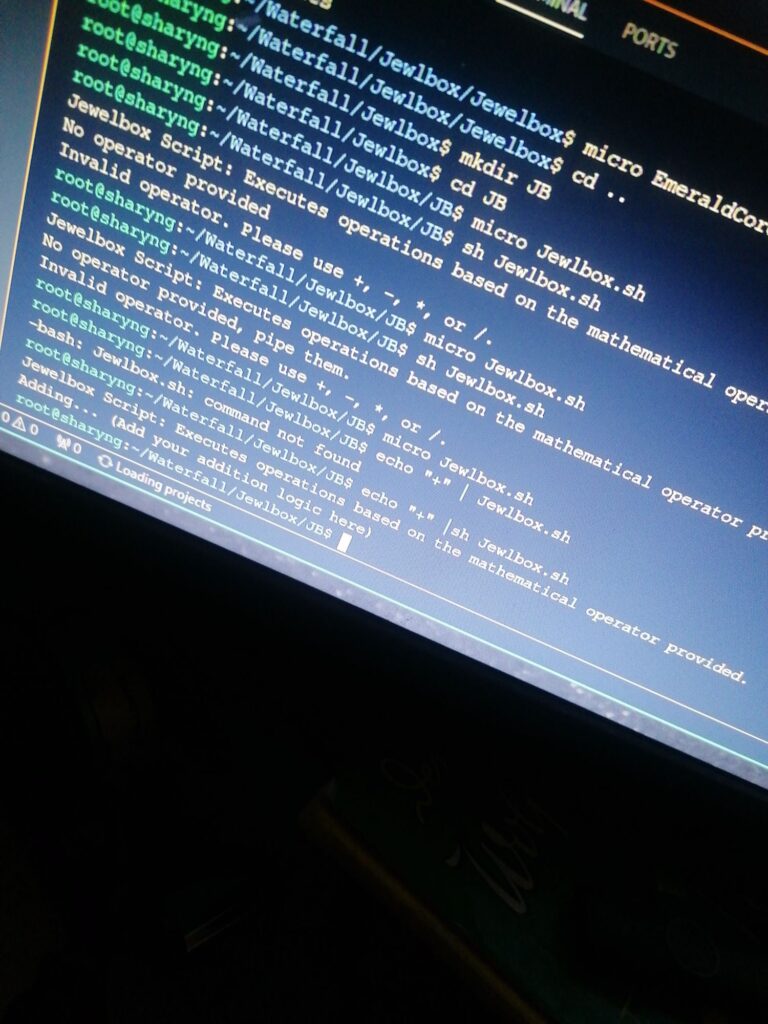

[root@sharyng JB]# cat Jewl.sh

#!/bin/bash

echo "Welcome to the Jewelbox Script"

echo "This script performs operations and modifies file lines based on the provided mathematical operator."

echo "Operations:"

echo "+ : Add a single line to a file. You'll be prompted to enter the line content and the target file path."

echo "- : Remove a single line from a file. You'll be prompted to enter the line number and the target file path."

echo "* : Remove multiple lines from a file. You'll be prompted to enter the file path containing line numbers to remove and the target file path."

echo "/ : Add multiple lines to a file. You'll be prompted to enter the file path containing lines to add and the target file path."

echo "Please provide an operator (+, -, *, /):"

# Directly read the operator from stdin

read operator

# Function to invoke core.sh with the operator

invoke_core() {

printf '%s\n' "$operator" | ./core.sh

}

# Execute logic based on the operator

case $operator in

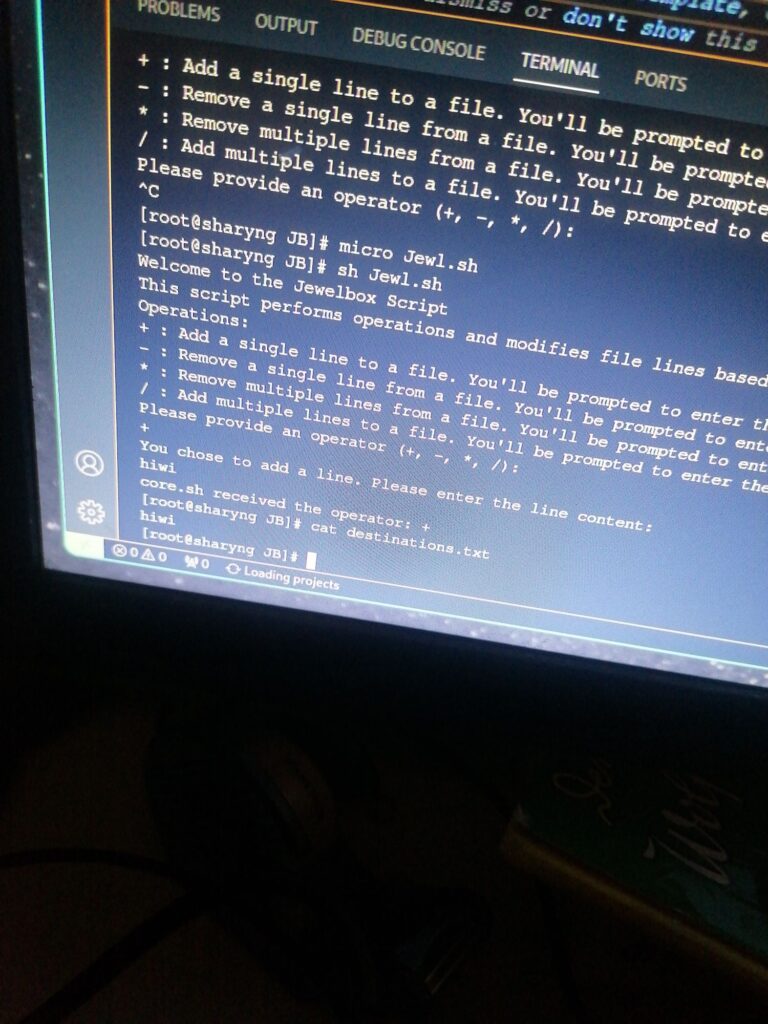

+)

echo "You chose to add a line. Please enter the line content:"

read line_content

echo "$line_content" >> destinations.txt

;;

-)

echo "You chose to remove a line. Please enter the line content:"

read line_content

sed -i "/$line_content/d" destinations.txt

;;

\*)

echo "You chose to remove multiple lines. Please enter the filepath containing line numbers to remove (one per line):"

read line_numbers_filepath

echo "Enter the target filepath to modify:"

read filepath

filepath=${filepath:-destinations.txt} # assumption: fall back to the shared list when no path is entered

while IFS= read -r line_number; do

sed -i "${line_number}d" "$filepath"

done < "$line_numbers_filepath"

echo "Lines removed from $filepath."

;;

/)

echo "You chose to add multiple lines. Please enter the filepath containing lines to add (one per line):"

read lines_to_add_filepath

echo "Enter the target filepath to modify:"

read filepath

filepath=${filepath:-destinations.txt} # assumption: fall back to the shared list when no path is entered

cat "$lines_to_add_filepath" >> "$filepath"

echo "Lines added to $filepath."

;;

*)

echo "Invalid operator. Please use +, -, *, or /."

exit 1

;;

esac

# Invoke core.sh with the operator after performing the action

invoke_core

Like it lets you add and remove lines on your admin list

And then executes core by piping the same operator into it

Like i can do normal pipe flags using math operators

The only thing it does is send to all destinations in the list

1 function applied for different use cases

Bc time

Each cycle of uptime is an on and off switch

If you had 10 wallets and removed 2 for the next cycle, you got 8, bc 8 were in this temporally newer transaction target list

You removed with the same action you added

Basically an input impulse for change and then a confirmation
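The 10 minus 2 example as a tiny sketch. `next_cycle_list`, the temp files, and the wallet labels are placeholders; the point is only that the next cycle pays whatever is in the list at that moment.

```shell
#!/bin/bash
next_cycle_list() {
    # $1 = current list, $2 = file of wallets to drop; prints the next list.
    # -x matches whole lines, so wallet_1 does not also remove wallet_10.
    grep -vxFf "$2" "$1"
}

list=$(mktemp)
drop=$(mktemp)
printf 'wallet_%s\n' 1 2 3 4 5 6 7 8 9 10 > "$list"
printf 'wallet_3\nwallet_7\n' > "$drop"

# Ten wallets in, two removed: the next cycle sees eight lines.
next_cycle_list "$list" "$drop" | wc -l

rm -f "$list" "$drop"
```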

Now to combine that with the code

Like its elusive, i dont actually know how much of the system i see is just a reflection of itself