Salad Savior – 百鬼֎夜行 【Liminality公募最優秀賞】【公式音源】

https://youtu.be/fXWLVOLcfjA

Secret Kinks of Elementary Functions

https://youtu.be/92wXQYcYLMg

I am gonna make

They are easy enough and easy to share

Harmony

https://youtu.be/WFXFu_uppaM

Data analysis, not debugged

https://chat.openai.com/share/b90deee5-971c-4d2f-bd5b-c9c5ff2b47be

Given the constraints of this environment where direct execution of Python scripts with dynamic external inputs is not feasible, I'll simulate what the standard output (stdout) of the script would look like when running with the example sequence provided in the `read_sequence_from_input()` function.

Assuming the sequence is `[1, 0, 1, 1, 0, 1, 0, 0, 1, 1]`, the script's placeholder values for autocorrelation (0.3) and the runs test Z-score (0.82), and the entropy computed from the sequence itself (6 ones and 4 zeros give about 0.971 bits, shown rounded here), the stdout would look like this:

```
Original Data Stream: ■□■■□■□□■■
Autocorrelation (Lag 1): 0.3 ■■■□□□□□□□
Runs Test Z-Score: 0.82 ■■■■□□□□□□
Entropy: 0.971 ■■■■■■■■■■
```

This output begins with the "Original Data Stream" line, which depicts the input sequence using filled (■) and empty (□) cubes for `True` (1) and `False` (0) values, respectively. It then presents each calculated metric (autocorrelation, runs test Z-score, and entropy) alongside a bar of 10 cubes. The number of filled cubes is proportional to the metric's value relative to its maximum possible value or significance threshold: 1 for the absolute autocorrelation, 1.96 for the Z-score, and 1 bit for binary entropy.

Piping data into a Python script typically involves reading from standard input (stdin), allowing the script to process data passed to it via a command-line pipeline. To adapt the example Python script to accept piped input, you would modify the `read_sequence_from_input()` function to read from stdin instead of a predefined array. Below is an adapted version of the script that reads a sequence of `1`s and `0`s from stdin, interprets them as boolean values, and performs the described analyses.
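The bar scaling described above, and the base-2 entropy the script computes, can be checked on their own. This is a minimal standard-library sketch; `binary_entropy_bits` and `cube_bar` are hypothetical helper names, and clamping the bar to 10 cubes is an added assumption so that a Z-score above 1.96 cannot overflow it.

```python
import math
from collections import Counter


def binary_entropy_bits(sequence):
    """Shannon entropy of a 0/1 sequence in bits (base 2); the maximum is 1.0."""
    n = len(sequence)
    counts = Counter(sequence)
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())


def cube_bar(value, max_value, total_cubes=10):
    """Filled cubes proportional to value / max_value, clamped to [0, total_cubes]."""
    filled = round((value / max_value) * total_cubes)
    filled = max(0, min(total_cubes, filled))  # assumption: never overflow the bar
    return '■' * filled + '□' * (total_cubes - filled)


sequence = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]  # 6 ones, 4 zeros
h = binary_entropy_bits(sequence)
print(f"{h:.3f}", cube_bar(h, 1))  # 0.971 ■■■■■■■■■■
print(cube_bar(0.82, 1.96))        # ■■■■□□□□□□
```

The 1-bit maximum only holds for base-2 logarithms; with natural logarithms the maximum for two symbols is ln 2 ≈ 0.693 nats.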

### Adapted Python Script for Piping

```python

import sys

import numpy as np
from scipy.stats import entropy


def read_sequence_from_stdin():
    # Read one line from stdin and convert it into a numpy array of 0s and 1s
    tokens = sys.stdin.readline().strip().split()
    if not tokens or any(t not in ('0', '1') for t in tokens):
        print("Input sequence must consist of 0s and 1s separated by spaces.", file=sys.stderr)
        sys.exit(1)
    return np.array([int(t) for t in tokens])


def calculate_entropy(sequence):
    # Shannon entropy in bits; the maximum for a binary sequence is 1.0
    _, counts = np.unique(sequence, return_counts=True)
    probabilities = counts / len(sequence)
    return entropy(probabilities, base=2)


# Placeholder functions for calculate_autocorrelation and calculate_runs_test_z_score:
# implement these based on your specific requirements


def generate_visualization(value, max_value, total_cubes=10):
    # Scale value against max_value and clamp so the bar never exceeds total_cubes
    filled_cubes = round((value / max_value) * total_cubes)
    filled_cubes = max(0, min(total_cubes, filled_cubes))
    return '■' * filled_cubes + '□' * (total_cubes - filled_cubes)


def main():
    sequence = read_sequence_from_stdin()

    # Placeholder values for demonstration
    autocorrelation = 0.3  # Implement actual calculation
    runs_test_z_score = 0.82  # Implement actual calculation
    sequence_entropy = calculate_entropy(sequence)

    original_stream_visual = ''.join('■' if x == 1 else '□' for x in sequence)
    autocorrelation_visual = generate_visualization(abs(autocorrelation), 1)
    # 1.96 is the two-sided 5% significance threshold for a Z-score
    runs_test_z_visual = generate_visualization(abs(runs_test_z_score), 1.96)
    entropy_visual = generate_visualization(sequence_entropy, 1)  # max binary entropy is 1 bit

    print("Original Data Stream:", original_stream_visual)
    print("Autocorrelation (Lag 1):", autocorrelation, autocorrelation_visual)
    print("Runs Test Z-Score:", runs_test_z_score, runs_test_z_visual)
    print("Entropy:", sequence_entropy, entropy_visual)


if __name__ == "__main__":
    main()

```
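The script leaves `calculate_autocorrelation` and `calculate_runs_test_z_score` as placeholders. One possible way to fill them in, sketched here without numpy and assuming the input is a list of 0/1 integers, is the standard lag-k sample autocorrelation and the Wald-Wolfowitz runs test. Returning 0.0 for degenerate inputs (a constant sequence, or too few symbols for a non-zero variance) is an arbitrary choice made for this sketch.

```python
import math


def calculate_autocorrelation(sequence, lag=1):
    """Sample autocorrelation at the given lag (standard ACF estimator)."""
    n = len(sequence)
    mean = sum(sequence) / n
    deviations = [x - mean for x in sequence]
    denominator = sum(d * d for d in deviations)
    if denominator == 0:
        return 0.0  # constant sequence: autocorrelation is undefined, report 0
    numerator = sum(deviations[t] * deviations[t + lag] for t in range(n - lag))
    return numerator / denominator


def calculate_runs_test_z_score(sequence):
    """Wald-Wolfowitz runs test Z-score for a 0/1 sequence."""
    n1 = sum(1 for x in sequence if x == 1)
    n2 = len(sequence) - n1
    if n1 == 0 or n2 == 0:
        return 0.0  # only one symbol present: the test is not defined
    # A new run starts wherever two neighbours differ
    runs = 1 + sum(1 for a, b in zip(sequence, sequence[1:]) if a != b)
    n = n1 + n2
    expected = 2 * n1 * n2 / n + 1
    variance = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n ** 2 * (n - 1))
    if variance == 0:
        return 0.0  # too short to estimate a spread
    return (runs - expected) / math.sqrt(variance)


sequence = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
print(round(calculate_autocorrelation(sequence), 3))    # -0.317
print(round(calculate_runs_test_z_score(sequence), 3))  # 0.843
```

For the example sequence the lag-1 autocorrelation is negative, which is one reason `main()` takes `abs()` before drawing the bar. The runs-test Z-score of about 0.84 is below 1.96, so randomness would not be rejected at the 5% level.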

### How to Pipe Data to This Script

1. **Prepare your data:** Make sure your data is in a format the script expects. For this script, your data should be a sequence of `1`s and `0`s separated by spaces (e.g., `1 0 1 1 0 1`).

2. **Run the script:** Save the script to a file, for example, `data_analysis.py`. Then, you can pipe data into it using a command line. Here's how you might echo a sequence into the script:

```bash

echo "1 0 1 1 0 1 0 0 1 1" | python data_analysis.py

```

3. **View the output:** The script reads the piped input, performs the analyses, and outputs the results and their visual representations to stdout.
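The script reads only the first line of stdin, so a sequence spread over several lines (for example the output of another program) would be truncated. A sketch of a more tolerant reader, assuming whitespace-separated tokens and rejecting any token other than `0` or `1`; `parse_bits` is a hypothetical helper name:

```python
import sys


def parse_bits(text):
    """Parse whitespace-separated 0/1 tokens from any number of lines."""
    tokens = text.split()  # split() with no argument also splits on newlines
    bad = [t for t in tokens if t not in ('0', '1')]
    if bad:
        raise ValueError(f"unexpected tokens: {bad[:5]}")
    if not tokens:
        raise ValueError("no input sequence")
    return [int(t) for t in tokens]


def read_sequence_from_stdin():
    # Read everything piped in, not just the first line
    try:
        return parse_bits(sys.stdin.read())
    except ValueError as err:
        print(f"Input must be 0s and 1s separated by whitespace ({err}).", file=sys.stderr)
        sys.exit(1)


print(parse_bits("1 0 1\n1 0 0\n"))  # [1, 0, 1, 1, 0, 0]
```

With this version in place, something like `printf '1 0 1\n1 0 0\n' | python data_analysis.py` would analyze all six values. Note that it returns a plain list rather than a numpy array.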

This approach enables flexible use of the script with various data sources, allowing for dynamic data analysis and visualization through command-line pipelines.

Of The Trees & Barclay Crenshaw – Blue Mile Ft. Strategy

https://youtube.com/watch?v=9dAHvgMUy7w&si=i_oXKqY10esSpO9e

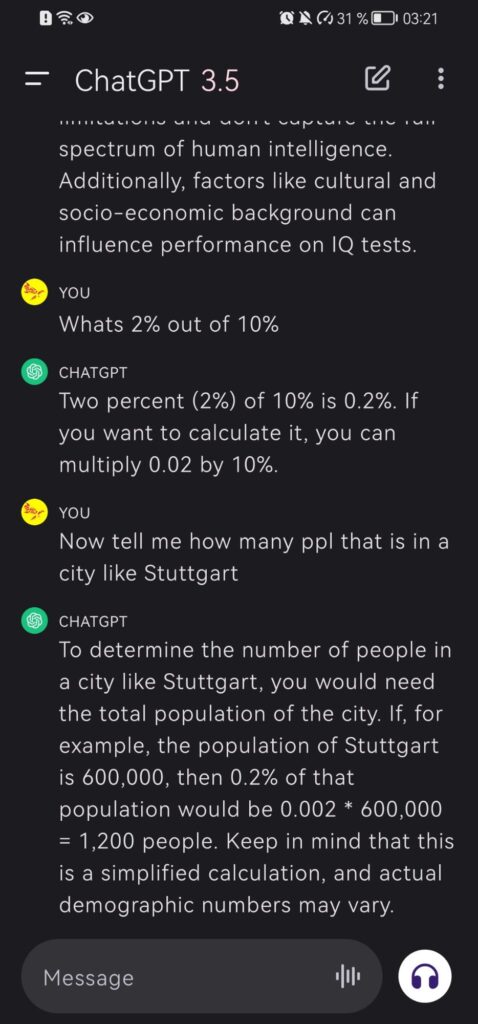

My math was off

And it still doesn't matter, the message is the same

Like

It also confuses mine

That i just like chill with everyone that comes along

Yes, i am trying to be social with bad statistical odds

If i start filtering i can stay in bed instead of socializing

Till a person i am looking for comes by, in like 10 years

Mine has already assimilated the pattern of me miauing at things i want

I love it

We have overcomplicated every gift of the gods

Like thing is

I don't get the references of my age group here

I havent seen the meme

About farmers protesting or whatever

Like i get posy

But i have a feeling that posy may be in a similar situation

Like it's probably a statistical thing

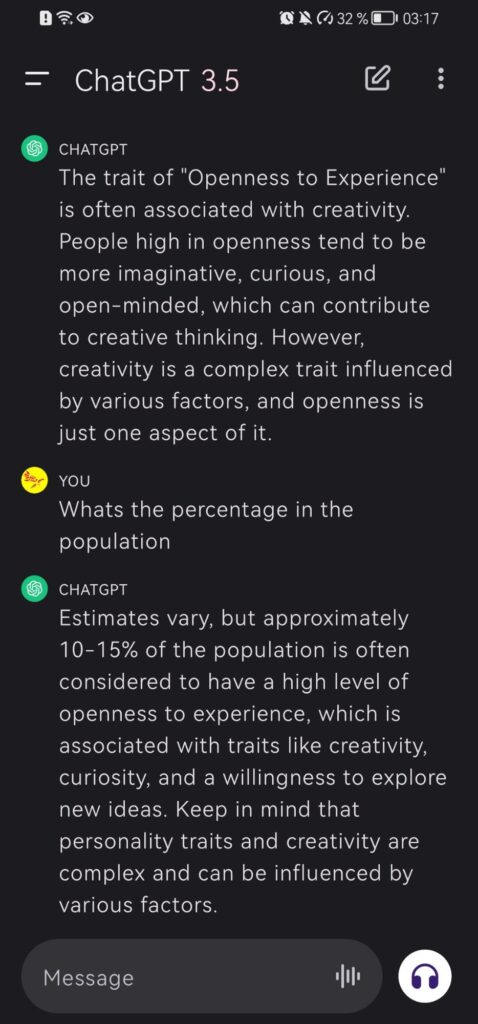

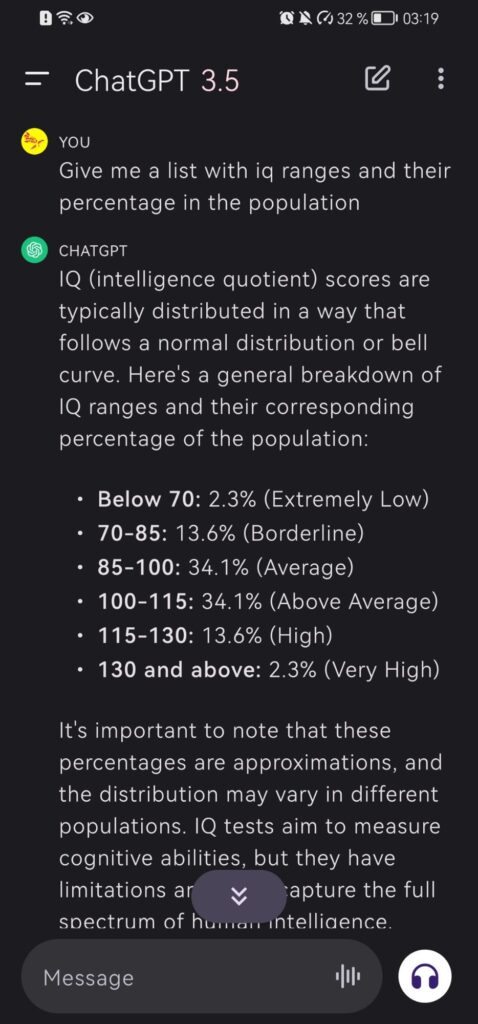

And now you add intelligence distribution

Last category

So the ppl i vibe with are

2% out of 10%

Add that i should look for female friends bc i am a female now and we have the same interests

Like the science gets fluffy there

But you get the idea

Me meeting someone i vibe with is statistically unlikely

Like life is more than that, but it makes being understood unlikely

Like i have long settled with that

But i feel like it's confusing mine

Like they noticed i have the ability to manage social environments and i actually get a not low status position in hierarchies of competence

Why am i not socializing?

Bc 90 %++ of ppl i have no interest in

Not saying i am mean or thinking less

Just that some ppl like apples and some oranges

I am happy if mine has a good conversation

But i don't expect normal ppl to wanna talk about system axioms and cultural myth cycles

Like didn’t that same news cycle everyone is talking about play out the same some months ago?

Like i would be demystifying the peer group in a sentence

Which they don’t like

Like i am waiting for someone to say something actually interesting

Like the current news have for me the same value as someone miauing at me

The miau is shorter and more insightful

Bc you can read the tonal inflections from it

But like there are obviously more ppl talking about the news

Like i am social

I just rarely have a reason to be social

Like i don't want mine to worry tho~

Like i will try to make a cake and get it to her mum, she already gave us the 2nd cake

Like they so yummy

But i should send one back already ~

Btw, found

2 keys

1 is bread

And the other is the mania

Like i got that message about that person in bad circumstances

And the family person i got it from

My first mental impulse was

Oh no what have i done wrong, this time

Like childhood related

Like i feel like that's the issue

I see something and take the blame for it, but then discard the idea

But the connection between me and whatever i associate still remains

And sometimes it gets completely discarded and sometimes not, sometimes in parts

Like unconsciously but with everything i interact with

Like mine sometimes does that, like jokingly gets louder

And i'll always look at them confused

And ask them what i have done wrong

Like everyone else trying that i would have bitten and buried

But its mine

Like you can be enforcing without being loud

Bread,

I figured out why i just kept the money and first thing gave mine some, instead of splitting 50/50

Me and mine ate the soup thing with meat

And we had cut slices, but still arranged as a whole loaf

And i just grabbed 1 side of half a bread in pieces

Not noticing i had taken a piece that was the end, which wasn’t even cut

Like my answer was

My brain didn’t get that far, i just grabbed 1 side

I feel like that's the underlying issue with that in general

Btw mine is good with all the details i ignore

I love

Her bc no one likes me anyways

I am insecure

I love cuddling with her

I love sitting around eating snacks we shouldn’t eat

Like someone was like drunk and really loud

And she put me behind herself and i hid behind her

i felt safe

She has an amazing intuition for social behavior and reading me

She randomly carries me around

Like why i love her, what a silly question

Why wouldn’t i

And she feeds me great food!

Her cooking is good

She a furry and trans

Like she mine

And she can tolerate me

Best household i ever had

Best girl

So

We are here

The fedi server does all the feedback

New features are integrated via the ability to add your own scripts to the pipe editor

Adding new plugins

Make maps of what's going on

Yeah maintenance and monitoring till the next stage

Obv

Not debugged

*pees diapy*

https://chat.openai.com/share/a59ae788-06a1-46d8-8170-3697d2b9cc6e

Here's an example of what the CLI output might look like, including the data board with source and destination script information:

```

Summary of Script Connections:

Script: script1.py, Complexity: Low

Connections:

- script2.py: Low

- script3.py: Medium

Script: script2.py, Complexity: Medium

Connections:

- script1.py: Medium

- script3.py: Low

Script: script3.py, Complexity: High

Connections:

- script1.py: Medium

- script2.py: High

Data Flow Summary:

Script: script1.py, Complexity: Low

Output Data: ['data1', 'data2'] --> script2.py

Script: script2.py, Complexity: Medium

Input Data: ['data1', 'data2'] <-- script1.py

Output Data: ['processed_data1', 'processed_data2'] --> script3.py

Script: script3.py, Complexity: High

Input Data: ['processed_data1', 'processed_data2'] <-- script2.py

Output Data: ['final_result']

Data Board:

Timestamp: 1646782561.540615, Source Script: script1.py, Destination Script: script2.py, Data Type: processed_data, Data Amount: 2, Data Speed: 0.5

Timestamp: 1646782562.7201335, Source Script: script2.py, Destination Script: script3.py, Data Type: processed_data, Data Amount: 2, Data Speed: 0.4

```

In this example:

- The summary of script connections provides information about the complexity levels and connections between scripts.

- The data flow summary details the input and output data for each script.

- The data board logs information about the processed data, including the source and destination scripts involved in the data flow, along with other relevant data information such as data amount, speed, and timestamp.

This comprehensive output provides insights into the script connections, data flow, and the performance of data processing, including source and destination script information.

```python
import time


class DataBoard:
    # Collects one log entry per observed transfer between two scripts
    def __init__(self):
        self.data_logs = []

    def log_data(self, source_script, destination_script, data_type, data_amount, data_speed, timestamp):
        self.data_logs.append({
            'source_script': source_script,
            'destination_script': destination_script,
            'data_type': data_type,
            'data_amount': data_amount,
            'data_speed': data_speed,
            'timestamp': timestamp,
        })


def analyze_data_flow(summary, script_data):
    # Analyze the data flow and identify potential bottlenecks or critical points
    analysis_results = []
    for item in summary:
        script_name = item['Script']
        connections = item['Connections']
        for other_script in connections:
            # Only compare a producer's output with a consumer's input
            if 'output_data' in script_data[script_name] and 'input_data' in script_data[other_script]:
                output_data_amount = len(script_data[script_name]['output_data'])
                # input_data is assumed to be newline-separated text
                input_data_amount = len(script_data[other_script]['input_data'].split('\n'))
                output_timestamp = time.time()  # Replace with actual timestamp
                input_timestamp = time.time()  # Replace with actual timestamp
                elapsed = input_timestamp - output_timestamp
                if elapsed <= 0:
                    continue  # no measurable time between the two: skip to avoid division by zero
                data_speed = (output_data_amount - input_data_amount) / elapsed
                if data_speed > 0:
                    analysis_results.append({
                        'source_script': script_name,
                        'destination_script': other_script,
                        'data_speed': data_speed,
                        'timestamp': output_timestamp,
                    })
    return analysis_results


def main():
    # summarize_connections, print_connections_summary and print_data_flow_summary are not
    # defined in this note; they are assumed to exist elsewhere (not shown).
    folder_path = '/path/to/scripts/folder'

    # Summarize the connections between scripts based on stdin and stdout
    summary, script_data = summarize_connections(folder_path)

    # Analyze the data flow
    analysis_results = analyze_data_flow(summary, script_data)

    # Print the summary
    print_connections_summary(summary)
    print_data_flow_summary(script_data)

    # Generate the data board (arguments in the order log_data expects: amount, then speed)
    data_board = DataBoard()
    for result in analysis_results:
        data_amount = len(script_data[result['source_script']]['output_data'])
        data_board.log_data(result['source_script'], result['destination_script'], 'processed_data',
                            data_amount, result['data_speed'], result['timestamp'])

    # Print the data board
    print("\nData Board:")
    for log in data_board.data_logs:
        print(f"Timestamp: {log['timestamp']}, Source Script: {log['source_script']}, "
              f"Destination Script: {log['destination_script']}, Data Type: {log['data_type']}, "
              f"Data Amount: {log['data_amount']}, Data Speed: {log['data_speed']}")


if __name__ == "__main__":
    main()
```
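The data flow summary above links `script1.py` to `script2.py` because one script's outputs are the other's inputs. A minimal sketch of deriving those links, assuming each script is described by a dictionary of `inputs` and `outputs` (a hypothetical layout, not necessarily what `summarize_connections` returns), and measuring speed as items per second between two timestamps (also an assumption; `analyze_data_flow` instead divides the difference in item counts by the elapsed time):

```python
def build_data_flow(scripts):
    """Return (source, destination, shared_items) for every producer/consumer pair."""
    edges = []
    for source, src_io in scripts.items():
        produced = set(src_io.get("outputs", []))
        for destination, dst_io in scripts.items():
            if destination == source:
                continue
            shared = sorted(produced & set(dst_io.get("inputs", [])))
            if shared:
                edges.append((source, destination, shared))
    return edges


def data_speed(amount, start_time, end_time):
    """Items per second between two timestamps; None when no time has elapsed."""
    elapsed = end_time - start_time
    if elapsed <= 0:
        return None
    return amount / elapsed


scripts = {
    "script1.py": {"outputs": ["data1", "data2"]},
    "script2.py": {"inputs": ["data1", "data2"], "outputs": ["processed_data1", "processed_data2"]},
    "script3.py": {"inputs": ["processed_data1", "processed_data2"], "outputs": ["final_result"]},
}
for source, destination, shared in build_data_flow(scripts):
    print(f"{source} --> {destination}: {shared}")
print(data_speed(2, 100.0, 104.0))  # 0.5
```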

https://chat.openai.com/share/cfa1f8f9-bec0-442a-ad0b-08475562e186

Certainly! You can create a main script that manages all the sub-scripts for trading, character behavior, and skill generation. Here's an example of how you can structure such a script:

```gdscript

# MainScript.gd
extends Node

# Import sub-scripts
const Skill = preload("res://Skill.gd")
const Item = preload("res://Item.gd")

# Character properties
class Character:
	var name: String
	var skills: Array
	var inventory: Array
	var currency: int
	var experience: int
	var level: int

	func _init(name: String):
		self.name = name
		self.skills = []
		self.inventory = []
		self.currency = 0
		self.experience = 0
		self.level = 1

	# Swap the listed items between this character and another one
	func trade(other, offered_items: Array, requested_items: Array):
		for item in offered_items:
			if inventory.has(item):
				inventory.erase(item)
				other.inventory.append(item)
		for item in requested_items:
			if other.inventory.has(item):
				other.inventory.erase(item)
				inventory.append(item)

	# Level up each time cumulative experience reaches level * 100
	func gain_experience(amount: int):
		experience += amount
		while experience >= level * 100:
			level += 1

# Initialize characters
var character1 = Character.new("Character1")
var character2 = Character.new("Character2")

# Initialize skills and items
func _ready():
	randomize()
	generate_random_skills(character1, 3)
	generate_random_skills(character2, 3)
	generate_random_items(character1, 5)
	generate_random_items(character2, 5)

# Generate random skills for a character
func generate_random_skills(character: Character, num_skills: int):
	for i in range(num_skills):
		# Enum values are ints, so a random index below SkillType.size() is a valid type
		var skill = Skill.new("Skill" + str(i), randi() % Skill.SkillType.size(), randf() * 20)
		character.skills.append(skill)

# Generate random items for a character
func generate_random_items(character: Character, num_items: int):
	for i in range(num_items):
		var item = Item.new("Item" + str(i), randi() % 50 + 10, randi() % Item.ItemType.size())
		character.inventory.append(item)

# Trade between characters
func trade(char1: Character, char2: Character, offered_items: Array, requested_items: Array):
	char1.trade(char2, offered_items, requested_items)

# Perform character interactions
func perform_interactions():
	# Example: Character interaction (skipped if either inventory is empty)
	if character1.inventory.size() == 0 or character2.inventory.size() == 0:
		return
	var offered_items = [character1.inventory[0]]
	var requested_items = [character2.inventory[0]]
	trade(character1, character2, offered_items, requested_items)

# Update characters based on experience
func update_characters():
	# Example: Gain experience for characters
	character1.gain_experience(50)
	character2.gain_experience(30)

# Main loop (runs every frame, so these example calls repeat continuously)
func _process(_delta):
	perform_interactions()
	update_characters()

```

In this main script:

- We import the sub-scripts for `Skill` and `Item`.

- We define a `Character` class to manage character properties and methods.

- We initialize characters (`character1` and `character2`) and generate random skills and items for them.

- We define functions for trading between characters, performing interactions, and updating characters based on experience.

- We implement a main loop where interactions and character updates occur.

You can attach this script to a node in your Godot scene and run your game. Adjust the specifics to match your game's mechanics and design.

Certainly! Implementing a trading and negotiation system based on past experiences adds depth to your game's economy and character interactions. Below, I'll outline a basic example of how you can achieve this in Godot using GDScript.

### Step 1: Define Item Class

First, let's create a class to represent items that can be traded. Each item will have properties such as name, value, and type.

```gdscript

# Item.gd
extends Resource
class_name Item

enum ItemType { WEAPON, ARMOR, CONSUMABLE }

var name: String
var value: int
var type: int  # one of ItemType

func _init(name: String = "", value: int = 0, type: int = ItemType.WEAPON):
	self.name = name
	self.value = value
	self.type = type

```

### Step 2: Implement Trading and Negotiation

Next, let's create a system for characters to trade items with each other and negotiate prices based on past experiences.

```gdscript

# Character.gd
extends Node

var inventory = []
var currency = 0

# Trading function
func trade(other_character: Node, offered_items: Array, requested_items: Array):
	# Calculate total value of offered and requested items
	var offered_value = calculate_items_value(offered_items)
	var requested_value = calculate_items_value(requested_items)

	# Check if the trade is acceptable (see negotiate_trade)
	var fair_trade = negotiate_trade(other_character, offered_value, requested_value)

	if fair_trade:
		# Transfer items, then settle the value difference once, from whoever owes it
		transfer_items(other_character, offered_items, requested_items)
		var difference = requested_value - offered_value
		if difference > 0:
			transfer_currency(other_character, difference)
		elif difference < 0:
			other_character.transfer_currency(self, -difference)
	else:
		# Negotiation failed
		print("Negotiation failed!")

# Calculate total value of items
func calculate_items_value(items: Array) -> int:
	var total_value = 0
	for item in items:
		total_value += item.value
	return total_value

# Negotiate trade based on past experiences
func negotiate_trade(other_character: Node, offered_value: int, requested_value: int) -> bool:
	# Placeholder: relationship_level is fixed at 0; derive it from past interactions with other_character
	var relationship_level = 0
	if relationship_level >= 0:  # Good relationship
		return true
	else:  # Poor relationship
		return offered_value >= requested_value * 0.9  # Accept if offered value is at least 90% of requested value

# Transfer items between characters
func transfer_items(other_character: Node, offered_items: Array, requested_items: Array):
	# Remove offered items from self's inventory and add them to other_character's inventory
	for item in offered_items:
		if inventory.has(item):
			inventory.erase(item)
			other_character.inventory.append(item)

	# Remove requested items from other_character's inventory and add them to self's inventory
	for item in requested_items:
		if other_character.inventory.has(item):
			other_character.inventory.erase(item)
			inventory.append(item)

# Transfer currency between characters (does nothing if this character cannot afford it)
func transfer_currency(other_character: Node, amount: int):
	if currency >= amount:
		currency -= amount
		other_character.currency += amount

```
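The settlement rule behind `trade` can be checked outside Godot. This Python sketch (`Trader`, `is_fair` and `settle_trade` are hypothetical names; items are `(name, value)` tuples) keeps the 90% acceptance threshold for poor relationships and pays the value difference exactly once, from whichever side owes it:

```python
from dataclasses import dataclass, field


@dataclass
class Trader:
    name: str
    currency: int = 0
    inventory: list = field(default_factory=list)


def is_fair(offered_value, requested_value, relationship_level):
    """Good relationships (>= 0) accept any deal; poor ones need at least 90% of the value."""
    if relationship_level >= 0:
        return True
    return offered_value >= requested_value * 0.9


def settle_trade(buyer, seller, offered, requested, relationship_level=0):
    """Swap items and settle the value difference once; returns True if the trade happened."""
    offered_value = sum(value for _, value in offered)
    requested_value = sum(value for _, value in requested)
    if not is_fair(offered_value, requested_value, relationship_level):
        return False
    # Both sides must actually own what they are trading
    if any(i not in buyer.inventory for i in offered) or any(i not in seller.inventory for i in requested):
        return False
    difference = requested_value - offered_value
    payer, payee = (buyer, seller) if difference > 0 else (seller, buyer)
    if payer.currency < abs(difference):
        return False  # the side that owes the difference cannot cover it
    for item in offered:
        buyer.inventory.remove(item)
        seller.inventory.append(item)
    for item in requested:
        seller.inventory.remove(item)
        buyer.inventory.append(item)
    payer.currency -= abs(difference)
    payee.currency += abs(difference)
    return True
```

With the Sword (50) for Shield (40) example in Step 3, character1 gives up the more valuable item and is paid the 10-unit difference by character2, provided character2 can cover it.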

### Step 3: Use Trading System

Now, you can use the trading system in your game environment. For example, you can call the `trade` function when characters interact with each other and attempt to trade items.

```gdscript

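# Environment.gd
extends Node

func _ready():
	# Example: Character interactions (assumes child nodes with Character.gd attached)
	var character1 = $Character1
	var character2 = $Character2
	var offered_items = [Item.new("Sword", 50, Item.ItemType.WEAPON)]
	var requested_items = [Item.new("Shield", 40, Item.ItemType.ARMOR)]
	# transfer_items() only moves items a character owns, so hand them out first
	character1.inventory.append(offered_items[0])
	character2.inventory.append(requested_items[0])
	character1.trade(character2, offered_items, requested_items)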

```

By implementing these steps, you create a trading and negotiation system where characters can exchange items and accept or reject a trade based on their relationship level (a fixed placeholder in `negotiate_trade`, meant to be derived from past experiences). Adjust the specifics to match your game's mechanics and design.

Certainly! Implementing individual, randomly generated skills for characters that can evolve and have various functions is an exciting addition. Below, I'll provide an example of how you can create such a system in Godot using GDScript.

### Step 1: Define Skill Class

First, let's create a GDScript for the skill class. Each skill will have properties such as name, type (e.g., damage, healing), and power.

```gdscript

# Skill.gd
extends Resource
class_name Skill

# Skill types
enum SkillType { DAMAGE, HEAL, UTILITY }

# Properties of a skill
var name: String
var type: int  # one of SkillType
var power: float

func _init(name: String = "", type: int = SkillType.DAMAGE, power: float = 0.0):
	self.name = name
	self.type = type
	self.power = power

```

### Step 2: Generate Random Skills

Next, let's create a function to generate random skills for characters. You can customize this function to suit your game's needs.

```gdscript

# Character.gd
extends Node

var skills = []

func generate_random_skills(num_skills: int):
	# List of possible skill names, types, and power ranges
	var possible_skills = [
		{ "name": "Attack", "type": Skill.SkillType.DAMAGE, "min_power": 10, "max_power": 20 },
		{ "name": "Healing", "type": Skill.SkillType.HEAL, "min_power": 5, "max_power": 15 },
		{ "name": "Utility", "type": Skill.SkillType.UTILITY, "min_power": 1, "max_power": 10 }
	]

	# Generate random skills
	for i in range(num_skills):
		var skill_data = possible_skills[randi() % possible_skills.size()]
		var skill_name = skill_data["name"]
		var skill_type = skill_data["type"]
		# Uniform power between min_power and max_power
		var power = randf() * (skill_data["max_power"] - skill_data["min_power"]) + skill_data["min_power"]
		skills.append(Skill.new(skill_name, skill_type, power))

```
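The power formula above, `randf() * (max_power - min_power) + min_power`, draws a value uniformly between the two bounds. The same idea in Python, with a seeded generator so runs are reproducible (`POSSIBLE_SKILLS` mirrors the table above; the function name and seed are illustrative):

```python
import random

POSSIBLE_SKILLS = [
    {"name": "Attack", "type": "DAMAGE", "min_power": 10, "max_power": 20},
    {"name": "Healing", "type": "HEAL", "min_power": 5, "max_power": 15},
    {"name": "Utility", "type": "UTILITY", "min_power": 1, "max_power": 10},
]


def generate_random_skills(num_skills, rng=None):
    """Pick a template uniformly, then draw power uniformly in [min_power, max_power]."""
    rng = rng or random.Random()
    skills = []
    for _ in range(num_skills):
        template = rng.choice(POSSIBLE_SKILLS)
        # random.uniform(a, b) computes a + (b - a) * random(), like the GDScript formula
        power = rng.uniform(template["min_power"], template["max_power"])
        skills.append({"name": template["name"], "type": template["type"], "power": power})
    return skills


for skill in generate_random_skills(3, random.Random(42)):
    print(skill["name"], skill["type"], round(skill["power"], 1))
```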

### Step 3: Use Skills in Environment

Once the characters have their skills, you can use them in various ways within your game environment. For example, you can have battles where characters use their skills to damage opponents or heal themselves.

```gdscript

# Environment.gd
extends Node

# Assumes both characters have a "skills" array and apply_damage()/apply_healing() methods
func battle(character1: Node, character2: Node):
	var attacker_skills = character1.skills
	if attacker_skills.size() == 0:
		return  # nothing to use; also avoids a modulo by zero below

	# Select a random skill for the attacker
	var attacker_skill = attacker_skills[randi() % attacker_skills.size()]

	# Apply skill effects based on skill type
	match attacker_skill.type:
		Skill.SkillType.DAMAGE:
			character2.apply_damage(attacker_skill.power)
		Skill.SkillType.HEAL:
			character1.apply_healing(attacker_skill.power)
		Skill.SkillType.UTILITY:
			# Apply utility effect
			pass

```
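The dispatch on skill type can likewise be sketched in Python. `Fighter`, its `hp` field and the clamp at zero hit points are assumptions for illustration; the GDScript leaves those details to `apply_damage()` and `apply_healing()`:

```python
import random
from dataclasses import dataclass, field


@dataclass
class Fighter:
    name: str
    hp: float  # hypothetical hit-point field, not part of the GDScript above
    skills: list = field(default_factory=list)  # (skill_type, power) tuples


def battle_turn(attacker, defender, rng):
    """Use one random attacker skill: DAMAGE hurts the defender, HEAL restores the attacker."""
    if not attacker.skills:
        return None  # nothing to use, so the turn is skipped
    skill_type, power = rng.choice(attacker.skills)
    if skill_type == "DAMAGE":
        defender.hp = max(0.0, defender.hp - power)  # assumption: hp never drops below 0
    elif skill_type == "HEAL":
        attacker.hp += power
    # UTILITY: no effect yet, matching the placeholder branch
    return skill_type
```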

### Step 4: Evolving Skills

To allow skills to evolve over time, you can implement a system where characters gain experience points (XP) from battles and level up, unlocking new skills or improving existing ones.

```gdscript

# Character.gd (continued)
var experience = 0
var level = 1

func gain_experience(amount: int):
	experience += amount
	# Loop so that one large gain can trigger several level-ups
	while experience >= next_level_experience():
		level_up()

func next_level_experience() -> int:
	return level * 100

func level_up():
	level += 1
	generate_random_skills(2)  # Example: Generate 2 new skills on level up

```
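Because experience is cumulative and the threshold is `level * 100`, a single large gain can cross several thresholds. A small Python sketch of that arithmetic (`apply_experience` is a hypothetical name; it also counts how many skills `level_up()` would add at 2 per level):

```python
def apply_experience(level, experience, amount, skills_per_level=2):
    """Add XP, level up while cumulative XP reaches level * 100.

    Returns (new_level, new_experience, new_skill_count).
    """
    experience += amount
    new_skills = 0
    while experience >= level * 100:
        level += 1
        new_skills += skills_per_level
    return level, experience, new_skills


print(apply_experience(1, 0, 250))  # (3, 250, 4)
```

For example, 250 XP from level 1 crosses 100 and 200 but not 300, ending at level 3 with 4 new skills.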

By implementing these steps, you can create characters with individual, randomly generated skills that evolve over time based on their experiences in the game environment. Adjust the specifics to match your game's mechanics and design.

Implementing adaptive and evolving behavior based on learned experience is a complex task that often involves machine learning techniques, such as reinforcement learning or neural networks. However, I can provide a simplified example of how you can simulate adaptive behavior in Godot using basic principles of state-based learning.

In this example, I'll create a simple system where the character learns from its past experiences and adjusts its behavior accordingly. We'll use a basic Q-learning approach to demonstrate this concept.

```gdscript

extends KinematicBody  # Godot 3.x API; Godot 4 renamed this node to CharacterBody3D

var speed = 5
var gravity = -20
var velocity = Vector3()

# List of possible behaviors
var behaviors = ["Idle", "Walk", "Jump", "Run", "Attack", "Defend"]

# Behavior being executed, and the state it was chosen from (used by update_q_values)
var current_behavior = "Idle"
var last_state = "Idle"

# Q-values for each state-action pair
var q_values = {}

# Parameters for Q-learning
var learning_rate = 0.1
var discount_factor = 0.9
var exploration_rate = 0.3

# Seconds between behavior decisions (an example value)
var decision_interval = 1.0
var time_since_decision = 0.0

func _ready():
	randomize()
	# Initialize Q-values
	initialize_q_values()
	# Start with a random behavior
	current_behavior = behaviors[randi() % behaviors.size()]
	last_state = current_behavior

func _physics_process(delta):
	# Apply gravity
	velocity.y += gravity * delta

	# Execute current behavior
	match current_behavior:
		"Idle":
			# Stop horizontal movement
			velocity.x = 0
			velocity.z = 0
		"Walk":
			# Move in a random direction
			move_random_direction()
		"Jump":
			jump()
		"Run":
			# Move faster in a random direction
			move_random_direction(speed * 2)
		"Attack":
			attack()
		"Defend":
			defend()

	# Move the character
	velocity = move_and_slide(velocity, Vector3.UP)

	# At each decision point: reward the behavior that just ran, then choose the next one
	time_since_decision += delta
	if time_since_decision >= decision_interval:
		time_since_decision = 0.0
		update_q_values(last_state, compute_reward(current_behavior))
		last_state = current_behavior
		change_behavior()

# Initialize Q-values
func initialize_q_values():
	for behavior in behaviors:
		q_values[behavior] = {}
		for action in behaviors:
			q_values[behavior][action] = 0.0

# Change the character's behavior based on Q-values
func change_behavior():
	# Select the action with the highest Q-value (exploitation)
	var max_q_value = -INF
	var best_action = ""
	for action in q_values[current_behavior].keys():
		if q_values[current_behavior][action] > max_q_value:
			max_q_value = q_values[current_behavior][action]
			best_action = action

	# Explore with probability exploration_rate
	if randf() < exploration_rate:
		best_action = behaviors[randi() % behaviors.size()]

	current_behavior = best_action

# Move in a random direction
func move_random_direction(custom_speed = speed):
	var direction = Vector3(rand_range(-1, 1), 0, rand_range(-1, 1)).normalized()
	velocity.x = direction.x * custom_speed
	velocity.z = direction.z * custom_speed

# Jump
func jump():
	if is_on_floor():
		velocity.y = sqrt(-2 * gravity * 3)  # Jump height of 3 units

# Perform an attack action
func attack():
	# Execute attack logic
	pass

# Perform a defensive action
func defend():
	# Execute defense logic
	pass

# Placeholder reward (hypothetical): replace with game feedback such as damage dealt or hits avoided
func compute_reward(_behavior) -> float:
	return 0.0

# Update Q-values based on the reward received
func update_q_values(previous_behavior, reward):
	# Highest Q-value available from the current state
	var max_q_value = -INF
	for action in q_values[current_behavior].keys():
		if q_values[current_behavior][action] > max_q_value:
			max_q_value = q_values[current_behavior][action]

	# Update Q-value for the previous state-action pair
	q_values[previous_behavior][current_behavior] += learning_rate * (reward + discount_factor * max_q_value - q_values[previous_behavior][current_behavior])

```

In this script:

- We define a set of possible behaviors for the character.

- We initialize Q-values for each state-action pair, where the state is the current behavior and the action is the next behavior to take.

- In the `_ready` function, the character starts with a random behavior.

- During each `_physics_process`, the character executes its current behavior and moves accordingly.

- After executing an action, the character should receive a reward based on its success or failure; the script leaves computing that reward to you.

- After receiving a reward, the Q-values are updated using the Q-learning formula.

- The character selects its next behavior based on the highest Q-value for the current state (exploitation), with a probability of exploration_rate for exploration.

This is a basic example to demonstrate the concept of adaptive behavior using Q-learning. In a real-world scenario, you would need to refine this approach and consider factors such as state representation, reward structure, exploration vs. exploitation trade-off, and convergence criteria. Additionally, you may explore more advanced machine learning techniques for more complex adaptive behaviors.
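The update in `update_q_values` is the standard Q-learning step Q(s, a) ← Q(s, a) + α(r + γ·max Q(s', a') − Q(s, a)), where the state is the current behavior and the action is the next behavior, so s' = a. A Python sketch of the same bookkeeping with epsilon-greedy selection, runnable without Godot (function names are illustrative):

```python
import random

BEHAVIORS = ["Idle", "Walk", "Jump", "Run", "Attack", "Defend"]


def init_q_values(behaviors):
    """Q[state][action] = 0.0, where state = current behavior and action = next behavior."""
    return {s: {a: 0.0 for a in behaviors} for s in behaviors}


def choose_next(q, state, exploration_rate, rng):
    """Epsilon-greedy: a random behavior with probability exploration_rate, else the best known."""
    if rng.random() < exploration_rate:
        return rng.choice(list(q[state]))
    return max(q[state], key=q[state].get)


def update_q(q, state, action, reward, learning_rate=0.1, discount_factor=0.9):
    """Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)), with s' = a."""
    best_next = max(q[action].values())
    q[state][action] += learning_rate * (reward + discount_factor * best_next - q[state][action])


q = init_q_values(BEHAVIORS)
update_q(q, "Idle", "Attack", reward=1.0)
print(q["Idle"]["Attack"])  # 0.1
print(choose_next(q, "Idle", exploration_rate=0.0, rng=random.Random(0)))  # Attack
```

One update with reward 1.0 from all-zero values gives 0.1 × 1.0 = 0.1, after which greedy selection from `Idle` picks `Attack`.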